Data Science Hypothesis Testing

Overview of Data Science Hypothesis Testing

Hypothesis Testing in Data Science

Hypothesis testing is a statistical method used in data science to make inferences about a population based on sample data. It helps to determine whether an observed effect is statistically significant or just due to random chance.

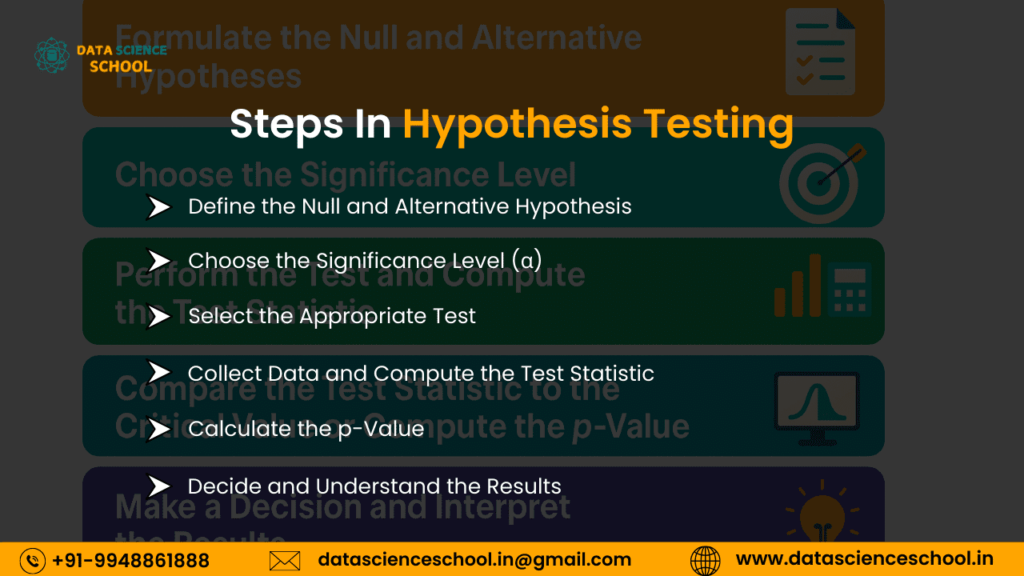

Steps in Hypothesis Testing

Define Null and Alternative Hypothesis

- Null Hypothesis (H₀): Assumes no effect or no difference (status quo).

- Alternative Hypothesis (H₁ or Ha): Assumes a significant effect or difference.

Select Significance Level (α)

- Typically set at 0.05 (5%) or 0.01 (1%), representing the probability of rejecting the null hypothesis when it is actually true (Type I error).

Choose a Statistical Test

- Depends on data type and distribution.

Examples:

- Z-test (for large samples, known variance)

- T-test (for small samples, unknown variance)

- Chi-square test (for categorical data)

- ANOVA (for comparing multiple groups)

- Mann-Whitney U test (non-parametric; for data that cannot be assumed to be normally distributed)

Calculate Test Statistic and P-value

- Test statistic (Z-score, t-score, etc.) measures how extreme the sample result is.

- P-value: Probability of obtaining a result at least as extreme as the observed one if H₀ is true.

Compare P-value with α

- P-value ≤ α → Reject H₀ (evidence supports H₁).

- P-value > α → Fail to reject H₀ (insufficient evidence for H₁).

Draw Conclusion

- Based on statistical analysis, make an informed decision.

Example: A/B Testing in Data Science

Suppose an e-commerce company tests two website designs (A & B) to check which generates more sales.

- H₀: There is no difference in conversion rates between Design A and Design B.

- H₁: One design has a higher conversion rate than the other.

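- Perform a two-proportion z-test (or a chi-square test) on the sample data, since each visit either converts or does not.

- If p-value < 0.05, the company rejects H₀ and concludes that the difference in conversion rates is statistically significant.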
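As a rough sketch of how this comparison could be computed, the snippet below runs a two-sided two-proportion z-test using only the Python standard library. This test is chosen here because conversion is a yes/no outcome; the function name and the visitor and conversion counts are hypothetical and exist only for illustration.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for H0: both designs share one conversion rate."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate: the best estimate of the common rate if H0 is true.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical counts, invented for illustration only.
z, p_value = two_proportion_z_test(conv_a=200, n_a=2000, conv_b=260, n_b=2000)
alpha = 0.05
decision = "reject H0" if p_value <= alpha else "fail to reject H0"
print(f"z = {z:.2f}, p = {p_value:.4f} -> {decision}")
```

With these made-up counts (10% vs. 13% conversion), the p-value falls well below 0.05, so H₀ would be rejected.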

Common Errors in Hypothesis Testing

- Type I Error (False Positive): Saying H₀ is wrong when it is actually right.

- Type II Error (False Negative): Not rejecting H₀ even though H₁ is true.

Introduction to Data Science Hypothesis Testing

Hypothesis testing is a fundamental statistical technique in data science used to make data-driven decisions. It helps analysts and researchers determine whether a particular assumption about a dataset is valid or if observed patterns are due to chance. By applying hypothesis testing, data scientists can validate models, optimize business strategies, and derive meaningful insights from data.

What is Hypothesis Testing?

Hypothesis testing is a method used in statistics to check if an idea or assumption about data is true. It helps researchers and analysts make decisions based on data instead of guessing.

How Does It Work?

- Start with a Question: Suppose you want to know if a new teaching method helps students score higher on tests.

- Make Two Assumptions:

- Null Hypothesis (H₀): There is no difference (the new method does not improve scores).

- Alternative Hypothesis (H₁): There is a difference (the new method improves scores).

- Collect Data: Gather test scores from students who used the new method and those who didn’t.

- Perform a Statistical Test: Use a formula to analyze the data and get a result (test statistic and p-value).

- Make a Decision: Compare the p-value with a set threshold (usually 0.05).

- If p ≤ 0.05, reject H₀ (the new method likely improves scores). If p > 0.05, fail to reject H₀ (there is not enough evidence that the new method improves scores).
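The workflow above can be sketched end to end with a permutation test. This is a swapped-in technique, not one the text names: it is used here because it needs nothing beyond the Python standard library and makes the meaning of the p-value concrete (how often random relabeling of students produces a gap at least as large as the observed one). The scores are hypothetical.

```python
import random

def permutation_test(treated, control, n_permutations=5000, seed=0):
    """One-sided permutation test for H1: mean(treated) > mean(control)."""
    rng = random.Random(seed)
    n_treated = len(treated)
    observed = sum(treated) / n_treated - sum(control) / len(control)
    pooled = list(treated) + list(control)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        # Under H0 the group labels are arbitrary, so shuffle them.
        rng.shuffle(pooled)
        left, right = pooled[:n_treated], pooled[n_treated:]
        diff = sum(left) / len(left) - sum(right) / len(right)
        if diff >= observed:
            at_least_as_extreme += 1
    # Add-one correction keeps the estimated p-value strictly above zero.
    p_value = (at_least_as_extreme + 1) / (n_permutations + 1)
    return observed, p_value

# Hypothetical test scores, invented for illustration only.
new_method = [78, 85, 90, 88, 92, 81, 87, 95]
old_method = [70, 75, 80, 72, 78, 74, 69, 77]
observed_gap, p_value = permutation_test(new_method, old_method)
print(f"observed gap = {observed_gap:.2f}, p = {p_value:.4f}")
```

Because the hypothetical groups barely overlap, almost no shuffle reproduces the observed gap, and the estimated p-value is far below 0.05.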

Why is Hypothesis Testing Important in Data Science?

Data-driven decisions must be based on statistical evidence rather than assumptions. Hypothesis testing allows data scientists to:

- Validate business or scientific claims with statistical significance.

- Compare different groups, models, or strategies objectively.

- Identify patterns and trends in data while minimizing errors.

- Ensure that conclusions are reliable and not based on random variations.

Basic Concepts in Hypothesis Testing

- Null Hypothesis (H₀): Represents the default assumption or no effect scenario. For example, “Both marketing strategies result in equal sales.”

- Alternative Hypothesis (H₁ or Ha): Represents the opposite of the null hypothesis, indicating a statistically significant effect. For example, “One marketing strategy leads to higher sales than the other.”

- Significance Level (α): The probability threshold (commonly 0.05 or 5%) used to determine whether to reject H₀.

- P-value: The probability of obtaining results at least as extreme as the observed ones if H₀ is true. If the p-value is less than or equal to α, we reject H₀.

- Test Statistic: A numerical measure (e.g., t-score, z-score) used to compare the observed data with what is expected under H₀.

Application of Hypothesis Testing in Data Science

- A/B Testing: Comparing two versions of a website, advertisement, or product feature to determine which performs better.

- Machine Learning Model Evaluation: Testing whether a new model significantly improves prediction accuracy.

- Medical Research: Verifying if a new drug has a statistically significant effect compared to a placebo.

- Marketing Analysis: Assessing the impact of different pricing strategies on customer behavior.

Understanding and applying hypothesis testing correctly ensures that data scientists make informed, statistically valid conclusions rather than relying on intuition or incomplete analysis.

Why is Hypothesis Testing Important?

Hypothesis testing is crucial in data science and statistics because it provides a systematic way to make decisions based on data rather than assumptions or intuition. It ensures that conclusions drawn from sample data are statistically valid and not just due to random variations.

Key Reasons Why Hypothesis Testing is Important

Supports Data-Driven Decision Making

- Helps organizations make informed choices based on statistical evidence.

- Reduces reliance on guesswork or intuition.

- Ensures business strategies, medical treatments, or scientific claims are backed by data.

Minimizes Errors and Bias

- Reduces the chances of making incorrect conclusions.

- Controls for Type I Errors (false positives) and Type II Errors (false negatives).

- Ensures fairness in comparing groups or evaluating outcomes.

Validates Scientific and Business Hypotheses

- Used in research studies to test the effectiveness of new drugs, treatments, or theories.

- Helps businesses test marketing strategies, product changes, or operational improvements.

Optimizes Machine Learning Models

- Helps compare different models and determine if one performs significantly better than another.

- Ensures improvements in accuracy, efficiency, and predictive power are statistically valid.

Improves Product and Service Testing

- Used in A/B testing to compare two versions of a product, website, or marketing campaign.

- Helps companies optimize conversion rates, user experience, and profitability.

Ensures Reproducibility and Reliability

- Scientific and business decisions need to be reproducible to be trustworthy.

- Hypothesis testing provides a structured approach to verifying results across different datasets.

By applying hypothesis testing, organizations, scientists, and analysts can confidently make decisions while minimizing the risk of errors.

Key Concepts in Hypothesis Testing

Hypothesis testing is a statistical method used to determine if there is enough evidence in a sample to support a particular claim about a population. To understand hypothesis testing, it’s essential to grasp some fundamental concepts.

1. Null Hypothesis (H₀) vs. Alternative Hypothesis (H₁)

Null Hypothesis (H₀): Represents the assumption that there is no effect, difference, or relationship in the data. It is the default assumption in hypothesis testing.

- Example: There is no difference in average test scores between students who took online and in-person classes.

Alternative Hypothesis (H₁ or Ha): Represents the opposite of the null hypothesis. It states that there is a real effect, difference, or relationship.

- Example: Students who took online classes have higher average test scores than those who attended in-person classes.

Hypothesis testing helps decide if there is enough evidence to reject the null hypothesis in favor of the alternative hypothesis.

2. Significance Level (α) and p-Value

Significance Level (α): A predefined threshold that determines when to reject the null hypothesis. It represents the probability of making a Type I error (false positive). The most commonly used values are:

- α = 0.05 (5%): There is a 5% chance of rejecting H₀ when it is actually true.

- α = 0.01 (1%) – A stricter threshold, reducing the likelihood of false positives.

p-Value: The chance of getting the observed results (or more extreme ones) if the null hypothesis is true.

- If p ≤ α, reject H₀ (strong evidence against the null hypothesis).

- If p > α, fail to reject H₀ (insufficient evidence to support H₁).

Example: If a new drug is tested against a placebo and the p-value is 0.03, with α = 0.05, we reject H₀ and conclude that the drug has a statistically significant effect.

3. Type I and Type II Errors

Type I Error (False Positive): Occurs when we incorrectly reject the null hypothesis when it is actually true.

- Example: Concluding that a new medicine is effective when it actually isn’t.

- Probability of Type I error = α (significance level).

Type II Error (False Negative): Occurs when we fail to reject the null hypothesis even though the alternative hypothesis is true.

- Example: Concluding that a new medicine is not effective when it actually works.

- Probability of Type II error = β, and the power of the test is 1 – β (higher power means lower chance of Type II errors).
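The claim that the Type I error rate equals α can be checked with a small simulation. The sketch below, which assumes a two-sided z-test with known standard deviation, repeatedly draws data for which H₀ is true by construction and counts how often the test rejects anyway. The function name, sample size, and number of experiments are illustrative choices.

```python
import random
from math import sqrt
from statistics import NormalDist

def type_i_error_rate(alpha=0.05, n=30, n_experiments=2000, seed=1):
    """Fraction of experiments that reject H0 even though H0 is true."""
    rng = random.Random(seed)
    critical = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided cutoff
    rejections = 0
    for _ in range(n_experiments):
        # H0 is true by construction: data come from N(mu=0, sigma=1).
        sample = [rng.gauss(0, 1) for _ in range(n)]
        z = (sum(sample) / n) / (1 / sqrt(n))
        if abs(z) > critical:
            rejections += 1
    return rejections / n_experiments

rate = type_i_error_rate()
print(f"Observed false-positive rate: {rate:.3f} (alpha = 0.05)")
```

The observed rate should land close to 0.05, with some simulation noise. Lowering `alpha` lowers the false-positive rate, at the cost of more Type II errors for a fixed sample size.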

| Concept | Meaning | Example |
|---|---|---|
| H₀ (Null Hypothesis) | No effect or difference | “No difference in customer satisfaction between two service methods” |
| H₁ (Alternative Hypothesis) | Significant effect or difference | “One service method leads to higher customer satisfaction” |
| Significance Level (α) | Acceptable risk of Type I error | 5% or 1% |
| p-Value | The chance of seeing these results if the null hypothesis is correct. | If p ≤ 0.05, reject H₀ |
| Type I Error | False positive | Concluding a drug works when it doesn’t |
| Type II Error | False negative | Concluding a drug doesn’t work when it does |

Steps in Hypothesis Testing

Hypothesis testing is a structured process that helps determine whether a given claim about a population is supported by sample data. It follows a systematic approach to ensure reliable conclusions.

1. Define the Null and Alternative Hypothesis

- Null Hypothesis (H₀): Assumes no effect, no difference, or no relationship in the population.

- Example: A new drug has the same effectiveness as the existing drug.

- Alternative Hypothesis (H₁ or Ha): It states that there is a change, difference, or connection.

- Example: The new medicine is more effective than the old one.

2. Choose the Significance Level (α)

- The significance level (α) is the probability of rejecting H₀ when it is actually true (Type I error).

- Common values are:

- α = 0.05 (5%) – Standard threshold for most studies.

- α = 0.01 (1%) – Stricter threshold used in critical applications like medical research.

3. Select the Appropriate Test

- The type of test depends on data type, sample size, and study design. Common tests include:

- Z-test: Large samples, known variance.

- T-test: Small samples, unknown variance.

- Chi-Square Test: Categorical data (e.g., surveys, A/B testing).

- ANOVA: Comparing more than two groups.

- Mann-Whitney U Test: Non-parametric alternative to t-test.

4. Collect Data and Compute the Test Statistic

- Gather sample data and calculate the test statistic (e.g., t-score, z-score).

- The test statistic measures how far the sample result is from what is expected under H₀.

5. Calculate the p-Value

- The p-value shows the chance of getting the observed results if the null hypothesis is correct.

- Compare p-value with α:

- If p ≤ α, reject H₀ (strong evidence for H₁).

- If p > α, fail to reject H₀ (insufficient evidence for H₁).

6. Decide and Understand the Results

- Reject H₀: The data provides strong evidence to support H₁.

- Example: The new medicine is significantly more effective than the old one.

- Fail to Reject H₀: There is not enough evidence to support H₁.

- Example: No statistically significant difference in the drug's effectiveness was detected.

Example: A/B Testing in Marketing

A company tests two website versions (A & B) to check if one has a higher conversion rate.

- H₀: Both versions have the same conversion rate.

- H₁: One version has a higher conversion rate.

- α = 0.05 (5%)

- A two-proportion z-test is performed (conversion is a yes/no outcome), and the p-value = 0.03.

- Since p < 0.05, the company rejects H₀ and concludes that one version is significantly better.

Common Statistical Tests in Data Science

Statistical tests help data scientists make inferences about a population based on sample data. The choice of test depends on factors such as sample size, data type, and distribution. Below are some of the most commonly used tests in data science.

1. Z-Test

Used when the sample size is large (30 or more) and the population variance is known. It usually compares the sample mean with the population mean.

Example Use Case

A company wants to test whether the average salary of employees in a department is different from the company-wide average salary.

Formula

$$Z = \frac{\bar{X} - \mu}{\sigma / \sqrt{n}}$$

where:

- $\bar{X}$ = sample mean

- $\mu$ = population mean

- $\sigma$ = population standard deviation

- $n$ = sample size

When to Use

- Large sample sizes ($n \geq 30$)

- Population variance is known

- Normally distributed data
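The Z formula above translates directly into code. The following minimal sketch uses only the Python standard library; the function name and the salary figures (in thousands) are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def one_sample_z_test(sample_mean, pop_mean, pop_sd, n):
    """Two-sided one-sample z-test with a known population standard deviation."""
    z = (sample_mean - pop_mean) / (pop_sd / sqrt(n))
    # Probability of a |Z| at least this large if H0 is true.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical figures: department mean 52, company-wide mean 50, sigma 10, n = 100.
z, p_value = one_sample_z_test(sample_mean=52.0, pop_mean=50.0, pop_sd=10.0, n=100)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

Here the standard error is 10 / √100 = 1, so z = 2.0 and the two-sided p-value is roughly 0.046, just under the usual 0.05 threshold.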

2. T-Test

Used when the population variance is unknown and has to be estimated from the sample, which matters most when the sample size is small (less than 30). It is useful for comparing means.

Types of T-Tests

- One-sample t-test: It compares the average of a sample to a known average of the population.

- Independent two-sample t-test: It compares the averages of two separate groups.

- Paired t-test: Compares means from the same group before and after a treatment.

Formula (for independent two-sample t-test)

$$t = \frac{\bar{X}_1 - \bar{X}_2}{\sqrt{\frac{s_1^2}{n_1} + \frac{s_2^2}{n_2}}}$$

where:

- $\bar{X}_1, \bar{X}_2$ = sample means

- $s_1^2, s_2^2$ = sample variances

- $n_1, n_2$ = sample sizes

Example Use Case

A researcher wants to compare the effectiveness of two diets on weight loss.

When to Use

- Small sample sizes ($n < 30$)

- Population variance unknown

- Normally distributed data
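The two-sample formula above uses separate variances for each group, which is the form usually called Welch's t-test. The sketch below computes that statistic together with the Welch-Satterthwaite degrees of freedom using only the standard library. It stops short of a p-value because the standard library has no t-distribution; SciPy's `stats.ttest_ind(a, b, equal_var=False)` returns one. The weight-loss figures are hypothetical.

```python
from math import sqrt
from statistics import mean, variance

def welch_t(sample1, sample2):
    """t statistic and approximate degrees of freedom for two independent samples."""
    n1, n2 = len(sample1), len(sample2)
    v1, v2 = variance(sample1), variance(sample2)  # sample variances (n - 1 divisor)
    se = sqrt(v1 / n1 + v2 / n2)
    t = (mean(sample1) - mean(sample2)) / se
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (v1 / n1 + v2 / n2) ** 2 / (
        (v1 / n1) ** 2 / (n1 - 1) + (v2 / n2) ** 2 / (n2 - 1)
    )
    return t, df

# Hypothetical weight loss in kg for two diets.
diet_a = [1, 2, 3, 4, 5]
diet_b = [2, 4, 6, 8, 10]
t_stat, dof = welch_t(diet_a, diet_b)
print(f"t = {t_stat:.3f}, df = {dof:.2f}")
```

The resulting t would then be compared against a t-distribution with the computed degrees of freedom.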

3. Chi-Square Test

Used for categorical data to test whether two categorical variables are independent.

Formula

$$\chi^2 = \sum \frac{(O - E)^2}{E}$$

where:

- $O$ = observed frequency

- $E$ = expected frequency

Types of Chi-Square Tests

- Chi-square test for independence: Checks if two categorical variables are related.

- Chi-square goodness-of-fit test: Checks if sample data follows an expected distribution.

Example Use Case

A marketing team wants to test if product preference is independent of gender.

When to Use

- Categorical data

- Large sample size (an expected frequency of at least 5 in each category)
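To make the chi-square formula concrete, the sketch below computes expected frequencies and the test statistic for a contingency table using only the standard library. The p-value helper is deliberately limited to one degree of freedom (a 2×2 table), where the chi-square tail has a closed form via `erfc`; no continuity correction is applied. The function names and counts are hypothetical.

```python
from math import erfc, sqrt

def chi_square_independence(table):
    """Chi-square statistic and degrees of freedom for a table of observed counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if the two variables were independent.
            expected = row_totals[i] * col_totals[j] / total
            chi2 += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return chi2, dof

def chi2_p_value_dof1(chi2):
    """Upper-tail p-value, valid only for 1 degree of freedom."""
    return erfc(sqrt(chi2 / 2))

# Hypothetical counts: rows = gender, columns = preferred product (A, B).
observed = [[30, 20], [20, 30]]
chi2, dof = chi_square_independence(observed)
p_value = chi2_p_value_dof1(chi2)
print(f"chi2 = {chi2:.2f}, dof = {dof}, p = {p_value:.4f}")
```

Every expected count here is 25, so each cell contributes (5² / 25) = 1 and the statistic is 4.0. For larger tables, a library routine such as SciPy's `stats.chi2_contingency` handles the general p-value.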

4. ANOVA (Analysis of Variance)

Used to compare means across three or more groups to determine if at least one group mean is significantly different.

Formula

$$F = \frac{\text{Between-group variance}}{\text{Within-group variance}}$$

where:

- Between-group variance measures the variation among group means.

- Within-group variance shows how much the data varies inside each group.

Example Use Case

A company wants to compare customer satisfaction scores across three different branches.

When to Use

- Comparing three or more independent groups

- Data is normally distributed
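The F ratio above can be computed by hand to show where the two variance terms come from. This is a minimal standard-library sketch that returns the statistic and its degrees of freedom but not a p-value (SciPy's `stats.f_oneway` provides one). The function name and satisfaction scores are hypothetical.

```python
def one_way_anova_f(groups):
    """F statistic with between-group and within-group degrees of freedom."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [sum(g) / len(g) for g in groups]
    # Variation of the group means around the grand mean.
    ss_between = sum(
        len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means)
    )
    # Variation of observations around their own group mean.
    ss_within = sum(
        (x - m) ** 2 for g, m in zip(groups, group_means) for x in g
    )
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Hypothetical satisfaction scores for three branches.
branches = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
f_stat, df_between, df_within = one_way_anova_f(branches)
print(f"F = {f_stat:.2f} with ({df_between}, {df_within}) degrees of freedom")
```

For this toy data the between-group mean square is 13 and the within-group mean square is 1, giving F = 13 on (2, 6) degrees of freedom.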

5. F-Test

Checks whether two variances differ significantly. It is often used before conducting a t-test or ANOVA.

Formula

$$F = \frac{s_1^2}{s_2^2}$$

where:

- $s_1^2$, $s_2^2$ = variances of two samples

Example Use Case

A researcher wants to test whether the variance in test scores differs between two student groups before applying a t-test.

When to Use

- Comparing variances of two datasets

- Used as a prerequisite for ANOVA or t-tests
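The variance ratio is simple enough to compute directly. The sketch below returns the F statistic along with the numerator and denominator degrees of freedom that would be needed to look up a p-value; the function name and scores are hypothetical.

```python
from statistics import variance

def variance_ratio(sample1, sample2):
    """F = s1^2 / s2^2 with its numerator and denominator degrees of freedom."""
    f_stat = variance(sample1) / variance(sample2)  # sample variances (n - 1 divisor)
    return f_stat, len(sample1) - 1, len(sample2) - 1

# Hypothetical test scores for two student groups.
group_1 = [2, 4, 6, 8, 10]
group_2 = [1, 2, 3, 4, 5]
f_stat, df1, df2 = variance_ratio(group_1, group_2)
print(f"F = {f_stat:.2f} with ({df1}, {df2}) degrees of freedom")
```

A ratio near 1 is consistent with equal variances; here the ratio is 4. This test is known to be sensitive to departures from normality, which is one reason alternatives such as Levene's test are often used.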

Summary of Common Statistical Tests

| Test | Purpose | Data Type | Sample Size Condition |
|---|---|---|---|
| Z-Test | Compares the sample mean to the population mean | Continuous | Large ($n \geq 30$) |
| T-Test | Compare means between groups | Continuous | Small ($n < 30$) |
| Chi-Square Test | Test the independence of categorical variables | Categorical | Large (≥ 5 observations per category) |
| ANOVA | Compare means of 3+ groups | Continuous | Normally distributed |
| F-Test | Compares two variances | Continuous | No strict sample size condition |

How to Choose the Right Hypothesis Test?

Selecting the appropriate hypothesis test depends on various factors, such as the type of data, the number of samples, and the research question. Below is a step-by-step guide to help you choose the right statistical test.

1. Identify the Type of Data

- Numerical (Continuous) Data: Heights, weights, test scores, temperatures, etc.

- Categorical Data: Gender (Male/Female), product preference (A/B), survey responses (Yes/No)

2. Determine the Number of Groups/Samples

- One sample (comparing a sample to a known population).

- Two samples (comparing two independent groups).

- More than two samples (comparing multiple groups).

3. Check Data Distribution

- Normal distribution: Can use parametric tests (Z-test, T-test, ANOVA).

- Non-normal distribution: Use non-parametric tests (Mann-Whitney U test, Kruskal-Wallis test).

4. Consider Variance Assumptions

- If variances are equal → Use standard tests.

- If variances are different → Use alternative versions like Welch’s t-test.

5. Use the Decision Table

| Data Type | Comparison Type | Test to Use | Conditions |
|---|---|---|---|
| Numerical (Continuous) | One sample vs. population mean | Z-test | Large sample ($n \geq 30$), known variance |
| Numerical (Continuous) | One sample vs. population mean | One-sample t-test | Small sample ($n < 30$), unknown variance |
| Numerical (Continuous) | Two independent samples | Two-sample t-test | Normal distribution, equal variance |
| Numerical (Continuous) | Two independent samples | Mann-Whitney U test | Non-normal distribution |
| Numerical (Continuous) | More than two groups | ANOVA | Normal distribution |
| Numerical (Continuous) | More than two groups | Kruskal-Wallis test | Non-normal distribution |
| Categorical | Two categorical variables | Chi-Square test | Large sample size |
| Categorical | Two proportions | Z-test for proportions | Independent samples |
| Variance Comparison | Two variances | F-test | Checking equal variances |
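The decision table can also be expressed as a small lookup function. The sketch below is a simplified, hypothetical helper that covers the mean-comparison and categorical-independence rows only (it omits the two-proportion and variance-comparison rows), and it adds a `paired` flag for before/after designs. The parameter names are illustrative, not part of any library.

```python
def choose_test(data_type, n_groups=2, normal=True, known_variance=False, paired=False):
    """Return the name of a suitable test, following the decision table."""
    if data_type == "categorical":
        return "chi-square test"
    if data_type != "numerical":
        raise ValueError("data_type must be 'numerical' or 'categorical'")
    if n_groups == 1:
        # One sample compared against a known population mean.
        return "z-test" if known_variance else "one-sample t-test"
    if n_groups == 2:
        if paired:
            return "paired t-test"
        return "two-sample t-test" if normal else "Mann-Whitney U test"
    # Three or more groups.
    return "ANOVA" if normal else "Kruskal-Wallis test"

print(choose_test("numerical", n_groups=2, normal=False))
print(choose_test("numerical", n_groups=3))
print(choose_test("categorical"))
```

A helper like this is only a starting point; the assumptions behind the suggested test still need to be checked against the actual data.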

Example Scenarios

Scenario 1: A company wants to test if a new training program improves employee productivity.

- Data Type: Continuous (e.g., productivity score).

- Comparison: Pre-training vs. Post-training (same employees).

- Test to Use: Paired t-test (since the data is dependent).
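For Scenario 1, the paired t statistic is computed on the per-employee differences rather than on the two sets of scores separately. The sketch below uses only the standard library and returns the statistic with its degrees of freedom; SciPy's `stats.ttest_rel` would also give a p-value. The productivity scores are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev

def paired_t(before, after):
    """Paired t statistic and degrees of freedom for matched measurements."""
    diffs = [a - b for a, b in zip(after, before)]
    n = len(diffs)
    # Mean difference divided by its standard error.
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1

# Hypothetical productivity scores for the same five employees.
pre_training = [10, 12, 9, 11, 13]
post_training = [12, 14, 10, 13, 15]
t_stat, dof = paired_t(pre_training, post_training)
print(f"t = {t_stat:.2f} with {dof} degrees of freedom")
```

Because every employee improved by a similar amount, the differences have a small spread and the t statistic is large, even though the two score lists overlap considerably.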

Scenario 2: A medical researcher wants to compare the effectiveness of two drugs.

- Data Type: Continuous (e.g., blood pressure reduction).

- Comparison: Two independent groups (patients using Drug A vs. Drug B).

- Test to Use: Two-sample t-test (if normal) or Mann-Whitney U test (if non-normal).

Scenario 3: A marketing team wants to know if gender influences product preference.

- Data Type: Categorical (e.g., Male/Female vs. Product A/B).

- Comparison: Two categorical variables.

- Test to Use: Chi-Square test (for independence).

Real-World Applications of Hypothesis Testing in Data Science

- Healthcare: Clinical trials compare drug effectiveness (t-tests, ANOVA). Disease prediction examines risk factors (chi-square tests).

- Business & Marketing: A/B testing optimizes ads and websites (Z-tests, t-tests). Sales forecasting evaluates marketing impact.

- Finance: Stock market strategies are tested (t-tests). Loan default prediction uses hypothesis testing in regression models.

- Manufacturing: Quality control assesses defect reduction (chi-square tests). Machine efficiency comparisons use ANOVA.

- Social Sciences: Surveys analyze opinion differences (chi-square tests). Education studies test new teaching methods (paired t-tests).

- Technology: A/B testing evaluates UX changes. Network optimizations test latency improvements.

- Sports Analytics: Training programs and strategies are compared using t-tests.

Tools and Libraries for Hypothesis Testing

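1. Python Libraries

- SciPy: Offers statistical tests such as t-tests, chi-square tests, and ANOVA.

```python
from scipy import stats

# sample1 and sample2 are sequences of numeric observations
t_stat, p_value = stats.ttest_ind(sample1, sample2)
```

- Statsmodels: Offers advanced hypothesis testing and regression analysis.

```python
import statsmodels.api as sm

# Returns the t statistic, the p-value, and the degrees of freedom
t_stat, p_value, dof = sm.stats.ttest_ind(sample1, sample2)
```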

- NumPy & Pandas: Useful for data preprocessing and basic statistical calculations.

2. R Packages

- stats: A built-in package for t-tests, ANOVA, and chi-square tests.

```r
# Welch's two-sample t-test is the default (var.equal = FALSE)
t.test(sample1, sample2)
```

- ggplot2: Visualizes test results effectively.

- car: Performs advanced tests like Levene’s test for variance equality.

3. Statistical Software

- SPSS: User-friendly interface for hypothesis testing without coding.

- SAS: Powerful for large-scale statistical analysis in business and healthcare.

- Minitab: Common in manufacturing and engineering for quality control tests.

Challenges and Best Practices in Hypothesis Testing

Challenges

- Misinterpreting p-values: A low p-value doesn’t always imply practical significance.

- Type I & Type II Errors: Rejecting a null hypothesis that is true (Type I) or failing to reject one that is false (Type II).

- Small Sample Sizes: Can lead to unreliable results and low statistical power.

- Violating Assumptions: Many tests assume normality and equal variances, which may not always hold.

- Multiple Comparisons Issue: Running multiple tests increases the risk of false positives.
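The small-sample challenge can be quantified before collecting data. The sketch below estimates the sample size per group needed to detect a given difference in means, using the common normal-approximation formula n = 2((z₁₋α/₂ + z₁₋β)σ/δ)². It assumes a two-sided test, two equally sized groups, and a known common standard deviation; the function and parameter names are hypothetical.

```python
from math import ceil
from statistics import NormalDist

def sample_size_per_group(effect, sd, alpha=0.05, power=0.80):
    """Approximate n per group to detect a mean difference of `effect`."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance cutoff
    z_beta = NormalDist().inv_cdf(power)           # quantile for the desired power
    return ceil(2 * ((z_alpha + z_beta) * sd / effect) ** 2)

# Detecting half a standard deviation vs. a full standard deviation.
n_medium = sample_size_per_group(effect=0.5, sd=1.0)
n_large = sample_size_per_group(effect=1.0, sd=1.0)
print(n_medium, n_large)
```

Halving the effect size roughly quadruples the required sample, which is why small studies often lack the power to detect modest effects. Exact t-based calculations give slightly larger numbers.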

Best Practices

- Choose the Right Test: Match the test to the data type and distribution.

- Ensure Adequate Sample Size: Use power analysis to determine a sufficient sample size.

- Check Assumptions: Validate normality and variance equality before applying parametric tests.

- Control for Multiple Testing: Use Bonferroni correction or False Discovery Rate (FDR).

- Interpret Results in Context: Look beyond p-values, considering effect size and confidence intervals.
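The two multiple-testing corrections named above can be sketched in a few lines. Bonferroni compares each p-value to α/m, while the Benjamini-Hochberg procedure (one standard way to control the False Discovery Rate) finds the largest rank k whose sorted p-value is at most (k/m)·α and rejects everything up to that rank. The p-values below are hypothetical.

```python
def bonferroni(p_values, alpha=0.05):
    """Reject H0 only where p <= alpha / m (controls the family-wise error rate)."""
    m = len(p_values)
    return [p <= alpha / m for p in p_values]

def benjamini_hochberg(p_values, alpha=0.05):
    """Benjamini-Hochberg step-up procedure (controls the false discovery rate)."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    cutoff_rank = 0
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= rank / m * alpha:
            cutoff_rank = rank  # keep the largest rank that passes
    reject = [False] * m
    for i in order[:cutoff_rank]:
        reject[i] = True
    return reject

# Hypothetical p-values from eight separate tests.
p_values = [0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205]
print("Uncorrected:", sum(p <= 0.05 for p in p_values))
print("Bonferroni: ", sum(bonferroni(p_values)))
print("BH (FDR):   ", sum(benjamini_hochberg(p_values)))
```

Without correction, five of the eight tests fall below 0.05; Bonferroni keeps one and Benjamini-Hochberg keeps two, illustrating that FDR control is less conservative than Bonferroni.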

The Role of Hypothesis Testing in Data-Driven Decisions

Hypothesis testing is essential for making objective, data-driven decisions across various fields. It helps validate assumptions, compare groups, and draw meaningful conclusions with statistical confidence. By selecting the right test, ensuring sufficient sample size, and interpreting results correctly, organizations can minimize errors and enhance decision-making accuracy.

Conclusion on Data Science Hypothesis Testing

Choosing the right hypothesis test depends on:

- The type of data (numerical vs. categorical)

- The number of samples/groups

- The distribution of data (normal vs. non-normal)

- Whether variances are equal (for some tests)

FAQs On Data Science Hypothesis Testing

1. What is hypothesis testing in data science?

Hypothesis testing is a way to check whether sample data provide enough evidence for a claim about a population. It helps in making data-driven decisions and validating results in various fields like healthcare, business, and finance.

2. What are the key components of hypothesis testing?

- Null Hypothesis (H₀): It assumes nothing changes or there is no difference.

- Alternative Hypothesis (H₁): It states that there is a real change or difference.

- Significance Level (α): The cutoff for deciding if a result is statistically significant, usually 0.05.

- p-Value: The chance of getting data at least as extreme as those observed if the null hypothesis is correct.

- Test Statistic: A number calculated to compare with critical values or p-values.

3. What are the five key steps in hypothesis testing?

- Define the hypotheses (H₀ and H₁).

- Set the significance level (α).

- Choose and conduct the appropriate statistical test.

- Calculate the test statistic and p-value.

- Make a decision to reject or fail to reject H₀.

4. What are the different types of hypothesis tests?

- Z-Test: For large samples and known variance.

- T-Test: For small samples and unknown variance.

- Chi-Square Test: For categorical data independence.

- ANOVA: Used to compare the averages of more than two groups.

- F-Test: For comparing variances between groups.

5. How are Type I and Type II errors different?

- Type I Error (False Positive): Saying H₀ is wrong when it is actually correct.

- Type II Error (False Negative): Not rejecting H₀ when H₁ is actually correct.

6. How do I choose the right hypothesis test?

- Numerical data (means comparison) → Use T-Test or ANOVA.

- Categorical data (independence check) → Use Chi-Square Test.

- Variance comparison → Use F-Test.

- Non-normal data, especially with a small sample size → Use non-parametric tests such as the Mann-Whitney U test.

7. What does the p-value do in hypothesis testing?

The p-value measures the probability of obtaining results at least as extreme as those observed if H₀ is true. If p is less than or equal to α (commonly 0.05), we reject H₀, meaning the result is statistically significant.

8. What challenges arise in hypothesis testing?

- Misinterpreting p-values.

- Violating assumptions of normality and equal variance.

- Small sample sizes lead to unreliable results.

- Multiple testing increases false positives.

9. How is hypothesis testing applied in real-world scenarios?

- Healthcare: Testing new drug effectiveness.

- Marketing: A/B testing for ads.

- Finance: Stock market trend analysis.

- Manufacturing: Quality control testing.

10. What are the best practices for hypothesis testing?

- Select the right test based on data type.

- Ensure a sufficient sample size for statistical power.

- Verify assumptions before using parametric tests.

- Use corrections for multiple comparisons (e.g., Bonferroni correction).

- Interpret results beyond just the p-value, considering effect size.

11. What is Hypothesis Testing in Data Science?

Hypothesis testing is a way to make decisions using data by checking assumptions about a group. It helps determine whether an observed effect is due to chance or a true relationship in the data. Common applications include A/B testing, quality control, medical trials, and financial forecasting.

12. What are the 5 Steps of Hypothesis Testing?

- Define Hypotheses: State the Null Hypothesis (H₀) (no change) and the Alternative Hypothesis (H₁) (a real change or difference).

- Set Significance Level (α): Pick a limit (usually 0.05) to decide if the result is significant.

- Select and Conduct a Statistical Test: Use an appropriate test (t-test, ANOVA, chi-square, etc.) based on data type and assumptions.

- Calculate the Test Statistic and p-Value: Calculate the test statistic and compare the p-value with α.

- Make a Decision: If the p-value is less than or equal to α (0.05 here), reject H₀; otherwise, do not reject H₀.