Data Science Libraries In Python

Top 35+ Python Libraries for Data Science: Features, Applications & Uses

- Data science libraries in Python provide pre-built functions for data manipulation, machine learning, and visualization.

- These libraries help automate repetitive tasks, reducing the time and effort required for data analysis.

- Several of them, such as Dask and Vaex, can process datasets larger than the available memory.

- Libraries for numerical computing enable fast mathematical operations on multi-dimensional arrays.

- Data manipulation libraries allow easy data cleaning, transformation, and analysis.


- Machine learning libraries offer built-in algorithms for classification, regression, and clustering.

- Deep learning frameworks support neural networks for AI applications.

- Statistical computing libraries help in hypothesis testing, probability distributions, and regression analysis.

- Data visualization tools enable the creation of static, animated, and interactive plots.

- Natural language processing libraries provide tools for text processing, sentiment analysis, and language modeling.

- Big data frameworks facilitate parallel computing and distributed data processing.

- Graph-based libraries help analyze relationships in network data structures.

- Libraries for image processing and computer vision allow manipulation, detection, and recognition of images.

- Automation libraries streamline machine learning workflows with minimal coding.

- Web frameworks assist in deploying AI and machine learning models as web applications.

- Time-series analysis tools help in forecasting trends based on historical data.

- Libraries for feature engineering and selection improve the accuracy of machine learning models.

- Data profiling tools generate automated reports for exploratory data analysis (EDA).

- These libraries collectively enhance productivity and efficiency in data science projects.

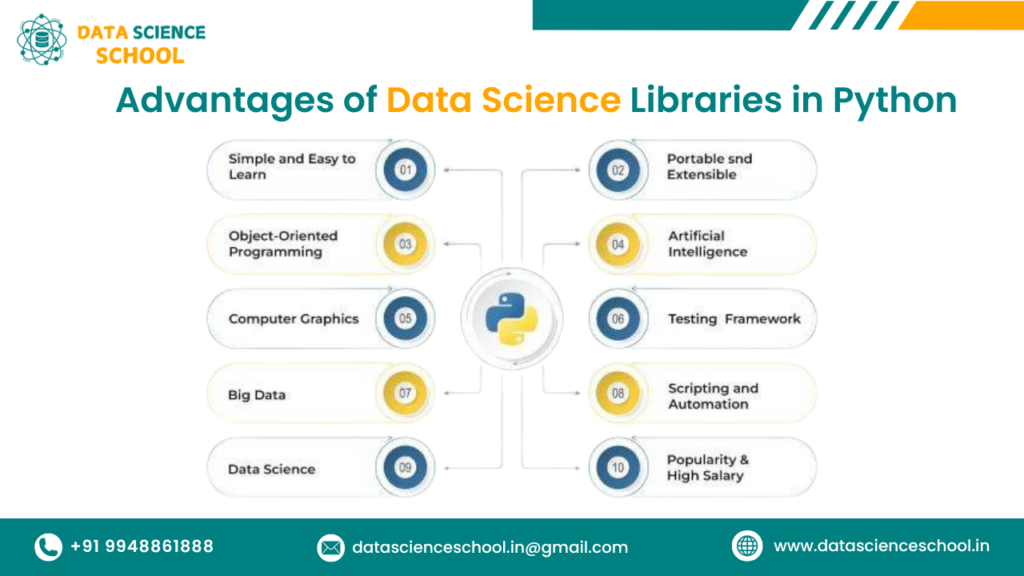

Advantages of Data Science Libraries In Python

- Easy to Use – Python’s libraries have simple syntax and well-documented functions, making them beginner-friendly.

- Open-Source & Free – Most libraries are open-source, meaning they are free to use and continuously improved by the community.

- Versatility – They support various tasks, including data analysis, machine learning, deep learning, visualization, and big data processing.

- Time-Saving – Pre-built functions reduce the need for writing complex algorithms from scratch, improving productivity.

- Scalability – Libraries like Dask and PySpark handle large datasets efficiently with parallel computing.

- Rich Ecosystem – Python has a vast ecosystem of libraries, enabling seamless integration across different data science workflows.

- Machine Learning & AI Support – Libraries such as Scikit-learn, TensorFlow, and PyTorch make building and deploying ML models easier.

- Data Visualization – Tools like Matplotlib, Seaborn, and Plotly help create insightful visualizations for better decision-making.

- Big Data Processing – Libraries like PySpark and Vaex enable efficient processing of massive datasets.

- Statistical & Mathematical Computing – SciPy and Statsmodels provide advanced statistical and scientific computation tools.

- Community Support – A strong global community provides continuous updates, tutorials, and troubleshooting support.

- Cross-Platform Compatibility – Python libraries run on multiple operating systems, including Windows, macOS, and Linux.

- Automation & Deployment – Libraries like FastAPI and Flask allow easy deployment of machine learning models as web applications.

- Performance Optimization – NumPy and Pandas run vectorized operations in compiled code, which is much faster than equivalent pure-Python loops for data manipulation and calculations.

- Integration with Other Technologies – Python’s libraries work well with databases, cloud platforms, and big data frameworks.

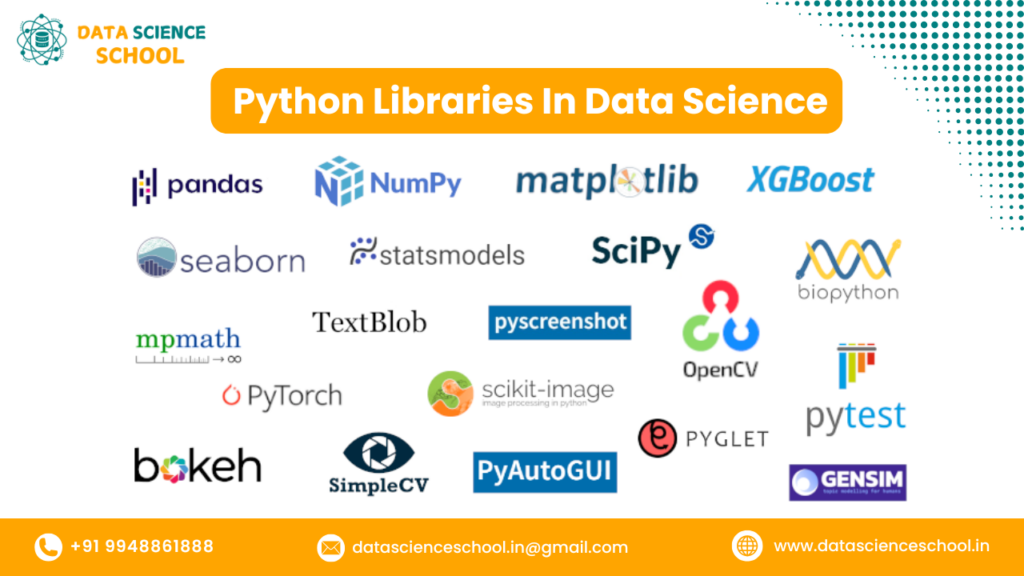

Data Science Libraries In Python

- NumPy

- Pandas

- Matplotlib

- Seaborn

- SciPy

- Scikit-learn

- Keras

- Plotly

- XGBoost

- Scrapy

- LightGBM

- CatBoost

- TensorFlow

- NLTK (Natural Language Toolkit)

- SpaCy

- Statsmodels

- Theano

- PyCaret

- Dask

- Pillow

- Gensim

- H2O.ai

- PyTorch

- Bokeh

- Desk

- GeoPandas

- SymPy

- PySpark

- Vaex

- NetworkX

- Joblib

- FastAPI

- OpenCV

- Pytz

- Pandas-Profiling

- Yellowbrick

- MLlib

Data Science Libraries in Python

NumPy

Description:

NumPy is the fundamental Python library for numerical computing. It provides multi-dimensional arrays and matrices, along with a wide range of mathematical functions that operate on these data structures quickly and efficiently.

Features:

- N-dimensional array (ndarray): Efficient storage and manipulation of large datasets.

- Mathematical functions: Offers a wide range of functions for operations like linear algebra, statistics, and trigonometry.

- Broadcasting: Enables arithmetic on arrays of different but compatible shapes without explicitly copying data.

- Integration with C/C++/Fortran: Interfaces with other languages for enhanced performance.

- Random number generation: Useful for simulations and statistical analysis.

Applications:

- Data Analysis: Performing complex numerical computations for data science and scientific research.

- Machine Learning: Handling large datasets and matrix operations for model training and evaluation.

- Scientific Computing: Used in simulations, physics, engineering, and statistical analysis.

- Image Processing: Images are represented as arrays, which makes them easy to manipulate and transform.
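A minimal sketch of the ndarray, broadcasting, linear-algebra, and random-number features listed above. The 3 x 2 array is made-up illustrative data, not taken from any real dataset.

```python
import numpy as np

# A 2-D ndarray: 3 samples (rows) x 2 features (columns), illustrative values
data = np.array([[1.0, 2.0],
                 [3.0, 4.0],
                 [5.0, 6.0]])

# Vectorized statistics along the sample axis -> one mean per column
col_means = data.mean(axis=0)

# Broadcasting: the (2,) mean vector is subtracted from every row of the (3, 2) array
centered = data - col_means

# Linear algebra: matrix product of the transpose with the centered data (2 x 2)
gram = centered.T @ centered

# Reproducible random numbers via the Generator API
rng = np.random.default_rng(seed=42)
noise = rng.normal(loc=0.0, scale=1.0, size=data.shape)

print(col_means)
print(centered)
print(gram)
```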

Pandas

Description:

Pandas is a robust Python library for data manipulation and analysis. It provides two main data structures, the DataFrame (2D) and the Series (1D), which make it easy to handle, process, and analyze structured data.

Features:

- DataFrame & Series: Easy-to-use data structures for storing and manipulating data.

- Missing data handling: Offers tools for cleaning and filling missing data.

- Merging & joining: Simplifies combining datasets from multiple sources.

- Groupby & aggregation: Group data and perform operations like mean, sum, or count.

- Time-series support: Powerful tools for date handling, resampling, and frequency conversion.

- File I/O: Supports reading from and writing to multiple file formats (CSV, Excel, SQL, etc.).

Applications:

- Data Cleaning: Preprocessing raw data by handling missing values and transforming data.

- Exploratory Data Analysis (EDA): Summarizing and visualizing data to understand patterns.

- Financial Analysis: Analyzing time-series financial data, like stock prices or market trends.

- Machine Learning Pipelines: Data preparation for machine learning tasks.
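The sketch below shows missing-data handling, group-by aggregation, and merging in a few lines. The sales and manager tables are hypothetical, made up only to illustrate the calls.

```python
import numpy as np
import pandas as pd

# Hypothetical sales records, one with a missing value
sales = pd.DataFrame({
    "region": ["North", "South", "North", "South", "East"],
    "units": [10, np.nan, 7, 5, 3],
})

# Missing data handling: replace NaN with 0
sales["units"] = sales["units"].fillna(0)

# Group by region and aggregate
totals = sales.groupby("region", as_index=False)["units"].sum()

# Merge with a second (hypothetical) dataset on a shared key
managers = pd.DataFrame({
    "region": ["North", "South", "East"],
    "manager": ["Ana", "Ben", "Cy"],
})
report = totals.merge(managers, on="region", how="left")
print(report)
```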

Matplotlib

Description:

Matplotlib is a popular Python library for creating static, animated, and interactive visualizations. It is highly customizable and works well with NumPy arrays and Pandas DataFrames to produce many types of plots, including line plots, bar charts, histograms, and more.

Features:

- Customizable plots: Offers extensive customization options for colors, labels, axes, legends, etc.

- Wide range of plot types: Supports line charts, scatter plots, bar charts, histograms, pie charts, and more.

- Interactive plotting: Works with Jupyter Notebooks and interactive environments to create dynamic visualizations.

- Integration with other libraries: Easily integrates with Pandas, NumPy, and other Python libraries.

- Export options: Allows saving plots in various formats such as PNG, PDF, SVG, and more.

Applications:

- Data Visualization: Creating high-quality visualizations to explore and analyze data.

- Exploratory Data Analysis (EDA): Quickly visualizing distributions, trends, and relationships in data.

- Scientific Research: Used in academic and research fields for generating publication-ready plots.

- Business Intelligence: Visualizing key metrics and trends in business data.
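A minimal sketch of a customized line plot and a histogram exported to PNG. It assumes a non-interactive environment, so it selects the "Agg" backend; the sine curve and random samples are illustrative only.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend so the script runs without a display
import matplotlib.pyplot as plt
import numpy as np

x = np.linspace(0, 2 * np.pi, 100)
rng = np.random.default_rng(0)
samples = rng.normal(size=500)

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(10, 4))

# Line plot with custom color, axis labels, and legend
ax1.plot(x, np.sin(x), color="tab:blue", label="sin(x)")
ax1.set_xlabel("x")
ax1.set_ylabel("y")
ax1.legend()

# Histogram of normally distributed samples
ax2.hist(samples, bins=30, color="tab:orange")
ax2.set_title("Histogram of normal samples")

fig.tight_layout()
fig.savefig("example_plots.png", dpi=100)  # PDF or SVG work by changing the extension
```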

Seaborn

Description:

Seaborn is a statistical data visualization library built on top of Matplotlib. It offers higher-level interfaces for drawing and customizing visualizations like heatmaps, box plots, and violin plots.

Features:

- Statistical plots: Easily create plots that display statistical relationships, such as regressions and distributions.

- Built-in themes: Includes pre-defined themes and color palettes for attractive visualizations.

- Integration with Pandas: Works seamlessly with Pandas DataFrames, making it easy to plot data directly.

- Support for categorical data: Offers specialized visualizations for categorical data, like box plots, violin plots, and bar plots.

- Enhanced color palettes: Provides high-quality color schemes for better visual clarity and aesthetics.

Applications:

- Data Exploration: Quickly visualize distributions, correlations, and relationships in datasets.

- Statistical Analysis: Create plots that highlight statistical trends, like regression lines or distributions.

- Business Intelligence: Visualize business metrics and trends with clear, appealing graphics.

- Scientific Visualization: Produce informative and attractive plots for research and publications.
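The following sketch draws a box plot and a violin plot directly from DataFrame columns after applying a built-in theme. The three groups of measurements are randomly generated, hypothetical data.

```python
import matplotlib
matplotlib.use("Agg")  # render to files; no display required
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
import seaborn as sns

# Hypothetical measurements for three categories
rng = np.random.default_rng(1)
df = pd.DataFrame({
    "group": np.repeat(["A", "B", "C"], 50),
    "value": np.concatenate([
        rng.normal(0.0, 1.0, 50),
        rng.normal(1.0, 1.0, 50),
        rng.normal(2.0, 0.5, 50),
    ]),
})

sns.set_theme(style="whitegrid")  # apply a built-in theme

fig, axes = plt.subplots(1, 2, figsize=(10, 4))
# Categorical plots drawn straight from DataFrame column names
sns.boxplot(data=df, x="group", y="value", ax=axes[0])
sns.violinplot(data=df, x="group", y="value", ax=axes[1])
axes[0].set_title("Box plot")
axes[1].set_title("Violin plot")
fig.tight_layout()
fig.savefig("seaborn_example.png")
```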

SciPy

Description:

SciPy is an open-source Python library for scientific and technical computing. Building on NumPy, it adds functions for optimization, integration, interpolation, eigenvalue problems, statistics, and other advanced mathematical operations.

Features:

- Scientific algorithms: Includes modules for optimization, integration, interpolation, and signal processing.

- Sparse matrices: Supports sparse matrix operations for efficient memory usage in large datasets.

- Linear algebra: Provides functions for eigenvalue problems, matrix decompositions, and solving linear systems.

- Special functions: Includes many specialized mathematical functions such as Bessel functions and gamma functions.

- Signal processing: Tools for filtering, frequency analysis, and other signal processing tasks.

Applications:

- Optimization: Solving optimization problems such as finding minimum/maximum values for functions.

- Signal Processing: Used for analyzing and filtering signals in fields like audio and communications.

- Numerical Integration: Applied to solve complex integral equations in scientific research and engineering.

- Machine Learning: Implements algorithms for clustering, dimensionality reduction, and optimization tasks.

- Statistical Analysis: Provides tools for statistical tests and hypothesis testing.
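A short sketch touching three of the SciPy applications above: minimizing a function, numerically integrating, and running a two-sample t-test. The function and the random samples are illustrative choices, not from the original text.

```python
import numpy as np
from scipy import integrate, optimize, stats

# Optimization: minimize f(x) = (x - 3)^2 + 1, whose minimum is at x = 3
result = optimize.minimize_scalar(lambda x: (x - 3) ** 2 + 1)

# Numerical integration: integral of sin(x) from 0 to pi (exact value is 2)
area, abs_error = integrate.quad(np.sin, 0, np.pi)

# Hypothesis testing: do two samples have the same mean?
rng = np.random.default_rng(0)
a = rng.normal(0.0, 1.0, 100)
b = rng.normal(0.5, 1.0, 100)
t_stat, p_value = stats.ttest_ind(a, b)

print(f"argmin={result.x:.4f}, integral={area:.6f}, p-value={p_value:.4f}")
```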

Scikit-learn

Description:

Scikit-learn is a powerful machine learning library in Python that provides simple and efficient tools for data mining and data analysis. It is built on top of NumPy, SciPy, and Matplotlib and is widely used for implementing a variety of machine learning algorithms for classification, regression, clustering, and more.

Features:

- Wide range of algorithms: Supports classification, regression, clustering, dimensionality reduction, and model selection.

- Preprocessing tools: Provides methods for scaling, normalizing, encoding, and transforming data before training models.

- Model evaluation: Includes tools for cross-validation, hyperparameter tuning, and model performance metrics (e.g., accuracy, precision, recall).

- Pipeline support: Enables easy chaining of multiple steps in a machine learning workflow, from data preprocessing to model evaluation.

- Support for various data formats: Works seamlessly with NumPy arrays, Pandas DataFrames, and SciPy sparse matrices.

Applications:

- Machine Learning Models: Building and deploying machine learning models for tasks like classification, regression, and clustering.

- Data Preprocessing: Handling missing values, encoding categorical variables, scaling data, and transforming features.

- Model Evaluation: Comparing multiple models using cross-validation and selecting the best-performing one.

- Dimensionality Reduction: Reducing the number of features while retaining important patterns in data using PCA or other techniques.

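- Recommendation Systems: Providing building blocks such as nearest-neighbor search and matrix factorization (e.g., TruncatedSVD, NMF) for collaborative filtering and content-based recommendations.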
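A minimal sketch combining preprocessing, a pipeline, and cross-validation, using the Iris dataset bundled with scikit-learn. The choice of StandardScaler plus LogisticRegression is just one reasonable example, not the only workflow.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Pipeline: scaling is fitted only on training folds, which avoids data leakage
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))

# 5-fold cross-validation on the training set
cv_scores = cross_val_score(model, X_train, y_train, cv=5)

# Final fit and evaluation on held-out data
model.fit(X_train, y_train)
test_accuracy = model.score(X_test, y_test)
print(f"CV accuracy: {cv_scores.mean():.3f}, test accuracy: {test_accuracy:.3f}")
```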

Keras

Description:

Keras is an open-source, high-level neural network API written in Python and designed for fast experimentation. Keras 3 runs on top of JAX, TensorFlow, or PyTorch (earlier releases also supported Theano and Microsoft Cognitive Toolkit (CNTK)), and it is used to build and train deep learning models in a user-friendly, modular way.

Features:

- High-level API: Provides simple and intuitive methods to define and train neural networks.

- Modular and extensible: Allows easy addition of custom layers, loss functions, and optimizers.

- Pre-built models: Includes popular pre-trained models such as VGG16, ResNet, and Inception, which can be fine-tuned for specific tasks.

- Support for multiple backends: Keras 3 can run on JAX, TensorFlow, or PyTorch; older multi-backend releases supported TensorFlow, Theano, and CNTK.

- GPU support: Runs on GPUs through its backend framework for faster model training.

Applications:

- Deep Learning Models: Building neural networks for tasks like image classification, object detection, and natural language processing (NLP).

- Transfer Learning: Fine-tuning pre-trained models on custom datasets to save time and improve performance.

- Reinforcement Learning: Used for building models that learn through interaction with an environment.

- Time-series Prediction: Applying deep learning models for forecasting and sequence prediction tasks.

- Generative Models: Building models like GANs (Generative Adversarial Networks) for generating new data from learned patterns.
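A minimal sketch of the high-level Keras API: a small fully connected binary classifier trained on synthetic data. It assumes Keras is installed with a working backend (for example TensorFlow); the layer sizes, learning rate, and data are arbitrary illustrative choices.

```python
import numpy as np
import keras

keras.utils.set_random_seed(0)  # reproducible weights and shuffling

# Synthetic data: label is 1 when the first two features sum to a positive number
rng = np.random.default_rng(0)
X = rng.normal(size=(500, 4)).astype("float32")
y = (X[:, 0] + X[:, 1] > 0).astype("float32")

# Define the network layer by layer
model = keras.Sequential([
    keras.Input(shape=(4,)),
    keras.layers.Dense(16, activation="relu"),
    keras.layers.Dense(1, activation="sigmoid"),
])
model.compile(
    optimizer=keras.optimizers.Adam(learning_rate=0.01),
    loss="binary_crossentropy",
    metrics=["accuracy"],
)

# Train with 20% of the data held out for validation
history = model.fit(X, y, epochs=50, batch_size=32, validation_split=0.2, verbose=0)
loss, accuracy = model.evaluate(X, y, verbose=0)
preds = model.predict(X[:5], verbose=0)  # probabilities, shape (5, 1)
```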

Plotly

Description:

Plotly is a graphing library for creating interactive, web-based visualizations in Python. It supports a wide range of chart types, including statistical, scientific, 3D, and geographic plots. Plotly is designed to create highly customizable and visually appealing plots that can be embedded in web applications or shared online.

Features:

- Interactive plots: Provides interactive charts with features like zooming, panning, and hovering for detailed data inspection.

- Wide range of chart types: Supports a variety of plots, including line charts, scatter plots, bar charts, heatmaps, and 3D visualizations.

- Web-based visualization: Plots can be embedded directly into web applications and dashboards or shared on Plotly’s cloud platform.

- Integration with Pandas: Seamlessly integrates with Pandas DataFrames for easy plotting of data directly from structured datasets.

- Customization: Highly customizable charts with control over colors, markers, axis labels, and more.

Applications:

- Data Visualization: Creating interactive visualizations to explore and present data in an engaging way.

- Business Intelligence: Building interactive dashboards to visualize key business metrics and trends.

- Scientific Visualization: Generating interactive plots for scientific research and publications, especially for complex datasets.

- Geospatial Data Analysis: Plotting geographic data using maps, choropleths, and geospatial visualizations.

- Machine Learning: Visualizing model results, decision boundaries, and performance metrics interactively.

XGBoost

Description:

XGBoost (Extreme Gradient Boosting) is a high-performance, scalable machine learning library that implements gradient boosting algorithms. It is widely used for classification and regression tasks and is known for its efficiency and accuracy, especially in competitive machine learning environments.

Features:

- Gradient Boosting Algorithm: Implements a gradient boosting framework for both classification and regression tasks.

- High performance: Optimized for speed and efficiency, especially on large datasets.

- Regularization: Includes L1 and L2 regularization to prevent overfitting and improve generalization.

- Handling missing data: Built-in support for handling missing values during model training.

- Cross-validation support: Provides built-in cross-validation (xgboost.cv) and works with scikit-learn tools such as GridSearchCV for hyperparameter tuning.

- Parallel processing: Efficiently handles large-scale datasets by utilizing multi-core processors for parallel training.

Applications:

- Classification & Regression: Used for predictive modeling tasks, including classification, regression, and ranking.

- Kaggle Competitions: Popular in data science competitions due to its high performance and flexibility.

- Financial Modeling: Applied in credit scoring, fraud detection, and stock price prediction.

- Recommendation Systems: Used in collaborative filtering for product recommendation.

Scrapy

Description:

Scrapy is an open-source web crawling framework for Python used to extract data from websites. It enables users to efficiently scrape and parse web content for data collection, supporting both simple and complex web scraping tasks.

Features:

- Web crawling: Designed for crawling websites and extracting structured data.

- Data extraction: Provides tools to extract data using XPath or CSS selectors.

- Efficient: Optimized for large-scale web scraping with support for asynchronous requests.

- Built-in storage: Supports output formats like JSON, CSV, and XML for easy data storage.

- Robust and extensible: Allows custom extensions and middlewares to modify the scraping process.

- Handling of dynamic content: Supports handling JavaScript-heavy websites using integration with tools like Splash.

Applications:

- Web Scraping: Extracting data from websites for analysis, research, or data mining purposes.

- Market Research: Gathering data on products, prices, and reviews from e-commerce sites.

- Competitive Intelligence: Monitoring competitors by scraping data from their websites to track prices and other metrics.

- Content Aggregation: Collecting and aggregating content from various sources for publishing or analysis.

LightGBM

Description:

LightGBM (Light Gradient Boosting Machine) is an open-source, distributed gradient boosting framework designed for efficiency and scalability. It is particularly known for its training speed on large datasets and its strong performance in machine learning tasks, especially classification and regression.

Features:

- Gradient Boosting: Implements a gradient boosting algorithm optimized for faster training and reduced memory usage.

- Handling Large Datasets: Can efficiently handle large datasets and high-dimensional data with low memory consumption.

- Leaf-wise Tree Growth: Unlike traditional depth-wise (level-wise) growth, LightGBM grows trees leaf-wise, which often reduces loss faster but can overfit small datasets unless parameters like num_leaves or max_depth are limited.

- Categorical Feature Support: Supports direct handling of categorical features without the need for one-hot encoding.

- Parallel and GPU support: Can leverage parallel computing and GPU acceleration for faster model training.

Applications:

- Classification & Regression: Used for a variety of predictive tasks, including binary classification, multi-class classification, and regression.

- Kaggle Competitions: Popular in data science competitions due to its speed and accuracy.

- Finance: Applied in credit scoring, fraud detection, and stock price prediction.

- Customer Segmentation: Used in marketing to group customers based on purchasing behavior or other characteristics.

CatBoost

Description:

CatBoost is an open-source machine learning library developed by Yandex, which specializes in categorical feature handling and efficient gradient boosting. It is designed to provide robust performance in classification, regression, and ranking tasks, with minimal hyperparameter tuning.

Features:

- Categorical Feature Handling: Handles categorical features natively (they are declared, e.g., via the cat_features parameter), so manual encoding such as one-hot encoding is not required.

- Gradient Boosting: Implements gradient boosting with optimizations for better speed and accuracy.

- Overfitting Control: Includes built-in mechanisms for overfitting prevention, such as early stopping and model regularization.

- Efficiency: Offers fast training times and reduced memory usage, even with large datasets.

- Cross-validation: Supports built-in cross-validation functionality for model evaluation.

Applications:

- Classification & Regression: Applied for various machine learning tasks like binary and multi-class classification, and regression.

- Recommender Systems: Used in building recommendation models for products or services.

- Natural Language Processing (NLP): Applied in text classification, sentiment analysis, and other NLP tasks.

- Customer Churn Prediction: Used to predict customer churn based on historical behavior and features.

TensorFlow

Description:

TensorFlow is an open-source framework for machine learning and deep learning, developed by Google, designed to simplify the development and deployment of AI models. It is widely used for building, training, and deploying machine learning models, particularly deep learning models such as neural networks.

Features:

- Deep Learning: Excellent for building deep learning models like neural networks, CNNs, and RNNs.

- Flexible Architecture: Supports both CPU and GPU computation, making it suitable for scaling models across multiple devices.

- Cross-platform compatibility: Works across platforms, including desktops, mobile devices, and cloud environments.

- High-level APIs: Includes Keras for easy and rapid model development.

- Ecosystem tools: Offers TensorFlow Lite (for mobile), TensorFlow.js (for browser), and TensorFlow Extended (for production pipelines).

Applications:

- Image Classification: Used for building models that classify images in computer vision tasks.

- Natural Language Processing: Applied in language models, text generation, and sentiment analysis.

- Speech Recognition: Enables voice recognition and transcription tasks.

- Reinforcement Learning: Used in applications like robotics and gaming that require decision-making models.

- Healthcare: Helps in medical image analysis, such as tumor detection and diagnosis.
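A minimal sketch of TensorFlow's lower-level API: tf.GradientTape computes gradients for a linear model y = w * x + b, and the variables are updated by plain gradient descent. The synthetic data (true w = 3, b = 2) and the learning rate are illustrative assumptions.

```python
import numpy as np
import tensorflow as tf

# Synthetic data generated from y = 3x + 2 (no noise)
rng = np.random.default_rng(0)
X = tf.constant(rng.normal(size=(200,)).astype(np.float32))
y = 3.0 * X + 2.0

w = tf.Variable(0.0)
b = tf.Variable(0.0)
learning_rate = 0.1

for step in range(200):
    with tf.GradientTape() as tape:
        predictions = w * X + b
        loss = tf.reduce_mean(tf.square(predictions - y))  # mean squared error
    dw, db = tape.gradient(loss, [w, b])
    # Gradient descent step
    w.assign_sub(learning_rate * dw)
    b.assign_sub(learning_rate * db)

print(f"w={w.numpy():.3f}, b={b.numpy():.3f}, loss={loss.numpy():.6f}")
```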

NLTK (Natural Language Toolkit)

Description:

NLTK is a comprehensive library for natural language processing (NLP) in Python. It provides tools for working with human language data, including text preprocessing, tokenization, and linguistic structure analysis.

Features:

- Text Preprocessing: Includes tools for tokenization, stemming, lemmatization, and part-of-speech tagging.

- Corpora and Lexicons: Access to multiple datasets and word lexicons for linguistic analysis.

- NLP algorithms: Implements algorithms for classification, clustering, and parsing text data.

- Text Classification: Supports supervised machine learning methods for text classification.

- WordNet Integration: Provides access to WordNet for working with word definitions, synonyms, and antonyms.

Applications:

- Sentiment Analysis: Analyzing the sentiment of text data from sources like social media or reviews.

- Text Classification: Categorizing text into different predefined classes (e.g., spam vs. ham).

- Information Retrieval: Extracting relevant information from unstructured text.

- Chatbots: Building rule-based or machine learning-based conversational agents.

- Language Translation: Applied in machine translation tasks to translate text between languages.
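A minimal text-preprocessing sketch with NLTK: tokenization followed by Porter stemming. It uses wordpunct_tokenize and PorterStemmer because neither needs extra data downloads; tools such as word_tokenize or the WordNet lemmatizer first require downloading resources with nltk.download. The sentence is illustrative.

```python
from nltk.stem import PorterStemmer
from nltk.tokenize import wordpunct_tokenize

text = "The runners were running quickly through the parks."

# Regex-based tokenizer: splits words and punctuation
tokens = wordpunct_tokenize(text)

# Reduce alphabetic tokens to their stems (Porter algorithm)
stemmer = PorterStemmer()
stems = [stemmer.stem(token) for token in tokens if token.isalpha()]

print(tokens)
print(stems)
```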

SpaCy

Description:

SpaCy is an open-source NLP library designed for efficient and fast text processing. It focuses on performance and ease of use, offering industrial-strength NLP tools for tasks like tokenization, parsing, and entity recognition.

Features:

- Fast and efficient: Optimized for speed and memory usage, processing large volumes of text quickly.

- Pre-trained models: Includes pre-trained models for various languages for tasks like part-of-speech tagging, named entity recognition, and dependency parsing.

- Support for deep learning: Integrates with deep learning frameworks like TensorFlow and PyTorch for advanced NLP tasks.

- Text Preprocessing: Offers efficient methods for tokenization, lemmatization, and entity extraction.

- Pipeline support: Provides a flexible and customizable NLP pipeline for building complex workflows.

Applications:

- Named Entity Recognition (NER): Identifying and classifying entities in text (e.g., person names, organizations, dates).

- Text Classification: Categorizing text into topics, genres, or other categories.

- Part-of-Speech Tagging: Identifying grammatical components in text (e.g., nouns, verbs, adjectives).

- Machine Translation: Assisting in language translation tasks using NLP models.

- Chatbots: Building conversational agents that can understand and respond to user inputs.
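A minimal spaCy pipeline sketch. It starts from a blank English pipeline (tokenizer only, no model download) and adds a rule-based entity_ruler component, so the entities come from hand-written patterns rather than a statistical model. Pre-trained pipelines such as en_core_web_sm provide statistical NER and tagging but must first be installed with `python -m spacy download en_core_web_sm`.

```python
import spacy

# Blank English pipeline: tokenizer only, nothing to download
nlp = spacy.blank("en")

# Add a rule-based NER component with illustrative patterns
ruler = nlp.add_pipe("entity_ruler")
ruler.add_patterns([
    {"label": "ORG", "pattern": "Apple"},
    {"label": "GPE", "pattern": "Berlin"},
])

doc = nlp("Apple is opening a new office in Berlin in 2025.")
tokens = [token.text for token in doc]
entities = [(ent.text, ent.label_) for ent in doc.ents]

print(tokens)
print(entities)
```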

Statsmodels

Description:

Statsmodels is a Python library for statistical modeling and hypothesis testing. It provides tools for estimating statistical models, performing tests, and exploring data through detailed summaries and diagnostics.

Features:

- Statistical Models: Includes tools for linear regression, generalized linear models, time-series analysis, and more.

- Hypothesis Testing: Provides a wide range of statistical tests for various models.

- Data Exploration: Offers tools for descriptive statistics, visualization, and correlation analysis.

- Diagnostic Tools: Includes functionality for model diagnostics, residuals analysis, and model performance evaluation.

- Support for time-series: Supports autoregressive models, ARIMA, and other time-series techniques.

Applications:

- Econometrics: Analyzing economic data and developing predictive models.

- Time-Series Analysis: Forecasting and modeling time-series data for financial and scientific applications.

- Statistical Inference: Conducting hypothesis testing and estimating parameters in statistical models.

- Social Sciences: Used in fields like sociology and psychology for analyzing survey data and experiments.

Theano

Description:

Theano is an open-source Python library for defining, optimizing, and evaluating mathematical expressions involving multi-dimensional arrays, especially on large amounts of data. It was widely used to build neural networks and optimize computational graphs.

Features:

- Deep Learning: Facilitates the development of neural networks by efficiently calculating gradients.

- Symbolic Computation: Supports symbolic differentiation for optimization and gradient-based learning.

- Optimized Computation: Offers performance improvements using GPU acceleration and efficient use of memory.

- Flexible Expression: Handles multidimensional arrays and expressions for complex calculations.

- Legacy Framework: Major development ended in 2017 with version 1.0, but it influenced later deep learning frameworks, and early versions of Keras used it as a backend.

Applications:

- Deep Learning: Used to create neural networks for classification, regression, and other machine learning tasks.

- Scientific Computation: Efficiently solving mathematical problems that require symbolic and numerical computation.

- Computer Vision: Implementing vision-based deep learning algorithms for tasks like object recognition.

- Reinforcement Learning: Training models for decision-making tasks where actions lead to rewards.

PyCaret

Description:

PyCaret is an open-source, low-code machine learning library in Python that streamlines building, training, and deploying machine learning models. It automates tasks like data preprocessing, model training, and evaluation, so experiments need only minimal code.

Features:

- Automated ML Workflow: Automates tasks like data preprocessing, feature engineering, and model selection.

- Model Selection and Tuning: Supports a wide range of models and hyperparameter optimization.

- Model Evaluation: Provides easy-to-interpret model evaluation metrics and visualizations.

- Ensemble Learning: Implements techniques like stacking, boosting, and bagging to improve model performance.

- Deployment: Easy model deployment with built-in functionality for exporting models.

Applications:

- Quick Prototyping: Fast experimentation and development of machine learning models without extensive coding.

- Model Deployment: Deploying trained models for real-world use cases with minimal effort.

- Business Intelligence: Building predictive models for business applications like sales forecasting or customer segmentation.

- Education: Used as a teaching tool for beginners to quickly get hands-on experience with machine learning.

Dask

Description:

Dask is a parallel computing library that allows you to scale your Python workflows from a single machine to large clusters. It is designed to extend the functionality of existing libraries like Pandas and NumPy to handle larger-than-memory datasets.

Features:

- Parallel Computing: Provides parallelism for Python code, allowing for scalable computation.

- Integration with Pandas/NumPy: Works seamlessly with familiar libraries to process larger datasets.

- Distributed Computing: Supports scaling across multiple machines or clusters for big data workflows.

- Dynamic Task Scheduling: Utilizes dynamic task scheduling for handling complex workflows.

- Flexible Deployment: Can be run on a single machine or in distributed computing environments (e.g., cloud or HPC).

Applications:

- Big Data Processing: Handling and processing large datasets that cannot fit in memory using Dask DataFrames or arrays.

- Machine Learning: Scalable machine learning algorithms and pipelines for large datasets.

- Scientific Computing: Parallel computation for scientific simulations and large-scale experiments.

- Business Analytics: Large-scale analysis of business data, such as customer behavior or sales data.

Pillow

Description:

Pillow is a maintained fork of the Python Imaging Library (PIL) that adds image processing capabilities to Python. It supports opening, manipulating, and saving many different image formats.

Features:

- Image Manipulation: Provides tools to crop, rotate, resize, and apply filters to images.

- File Format Support: Supports numerous file formats like PNG, JPEG, BMP, GIF, and TIFF.

- Image Enhancement: Includes tools for adjusting brightness, contrast, sharpness, and other image properties.

- Drawing and Text: Allows drawing shapes, text, and other elements onto images.

- Image Conversion: Can convert between different image formats.

Applications:

- Image Processing: Editing and processing images for tasks like resizing, rotating, and applying effects.

- Web Development: Used for image handling in web applications, such as image uploads and transformations.

- Computer Vision: Preprocessing images for use in machine learning and computer vision models.

- Graphics Creation: Generating images programmatically, such as thumbnails or simple graphs.
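A minimal Pillow sketch covering drawing, resizing, rotation, filtering, enhancement, conversion, and saving. It creates an image in memory rather than opening a file, so the sizes and colors are arbitrary illustrative values.

```python
from PIL import Image, ImageDraw, ImageEnhance, ImageFilter

# Create a 400 x 300 RGB image filled with a solid color
img = Image.new("RGB", (400, 300), color=(30, 120, 200))

# Drawing: a rectangle outline and some text (default font)
draw = ImageDraw.Draw(img)
draw.rectangle([50, 50, 200, 150], outline=(255, 255, 255), width=3)
draw.text((60, 60), "Hello, Pillow", fill=(255, 255, 0))

thumb = img.copy()
thumb.thumbnail((100, 100))  # in place; preserves aspect ratio

rotated = img.rotate(90, expand=True)                    # enlarge canvas to fit
blurred = img.filter(ImageFilter.GaussianBlur(radius=2))  # filter
brighter = ImageEnhance.Brightness(img).enhance(1.3)      # enhancement
gray = img.convert("L")                                    # grayscale conversion

# Format conversion happens on save, based on the file extension
img.save("example.png")
img.save("example.jpg", quality=90)
```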

Gensim

Description:

Gensim is an open-source Python library primarily used for topic modeling, document similarity analysis, and natural language processing (NLP). It is known for its scalability and efficiency when dealing with large text corpora.

Features:

- Topic Modeling: Implements algorithms like Latent Dirichlet Allocation (LDA) for discovering hidden topics in large text datasets.

- Document Similarity: Provides tools for measuring similarity between documents based on vector space models.

- Efficient Memory Usage: Optimized for handling large text datasets with minimal memory footprint.

- Vector Space Models: Supports Word2Vec, FastText, and other word embedding techniques for representing words and documents.

- Streaming and Incremental Learning: Can process data in chunks, making it suitable for online and real-time learning.

Applications:

- Text Classification: Categorizing large sets of documents or text into predefined topics.

- Document Similarity: Finding similar documents or clustering documents with related content.

- Recommendation Systems: Building content-based recommendation systems using text similarity.

- Information Retrieval: Implementing search engines to retrieve relevant documents from a large corpus.

H2O.ai

Description:

H2O.ai is an open-source platform for building machine learning models with a focus on large-scale data analysis. It supports distributed computing and is optimized for speed and scalability, particularly for enterprise-level machine learning applications.

Features:

- Scalable ML: Supports both distributed and parallel computing for large datasets.

- AutoML: Offers AutoML functionality for automating model selection, hyperparameter tuning, and training.

- Wide Range of Models: Includes algorithms for regression, classification, clustering, deep learning, and time-series forecasting.

- Explainability: Provides model interpretability tools to help understand the decision-making process of machine learning models.

- Integration: Offers Python and R APIs and integrates with Apache Spark through Sparkling Water.

Applications:

- Predictive Analytics: Building models for forecasting, risk prediction, and other business use cases.

- Financial Services: Applied for fraud detection, credit scoring, and algorithmic trading.

- Marketing Analytics: Used for customer segmentation, churn prediction, and personalized marketing.

- Healthcare: Helps in predictive modeling for disease detection, patient outcomes, and drug discovery.

PyTorch

Description:

PyTorch is an open-source deep learning framework originally developed by Facebook’s AI Research lab (now Meta AI). Its dynamic computational graphs make model development flexible and efficient, which has made it popular among researchers and developers for building neural networks.

Features:

- Dynamic Computational Graphs: Builds the computation graph on the fly as the code runs (define-by-run), so network architectures can change from one iteration to the next, which makes it flexible for research.

- GPU Acceleration: Provides native support for GPU computation, making it efficient for large-scale neural network training.

- Deep Learning Models: Supports a wide range of architectures, including CNNs and RNNs, and is widely used for reinforcement learning.

- Autograd: Automatically computes gradients, which is essential for backpropagation in deep learning.

- Integration with Python Libraries: Works seamlessly with popular Python libraries like NumPy, SciPy, and Pandas.

Applications:

- Deep Learning: Building and training deep neural networks for computer vision, NLP, and other domains.

- Research & Prototyping: Used extensively in research due to its flexibility and ease of experimentation.

- Reinforcement Learning: Training agents in environments for decision-making tasks like robotics or gaming.

- Natural Language Processing (NLP): Building models for tasks like sentiment analysis, machine translation, and text generation.
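Autograd, GPU placement, and the define-by-run graph can be seen together in a few lines. This is a minimal sketch assuming `torch` is installed. The regression target y = 2x + 1 is a made-up example chosen so the result is easy to check, and the code falls back to the CPU when no GPU is present.

```python
import torch
from torch import nn

# Autograd: record operations on x, then backpropagate to get dy/dx.
x = torch.tensor(3.0, requires_grad=True)
y = x ** 2 + 2 * x          # dy/dx = 2x + 2
y.backward()
print(x.grad)               # tensor(8.)

# A tiny training loop: fit y = 2x + 1 with a single linear layer.
torch.manual_seed(0)
inputs = torch.linspace(0, 1, 20).unsqueeze(1)  # shape (20, 1)
targets = 2 * inputs + 1

# Use a GPU when one is available; the code runs unchanged on CPU.
device = "cuda" if torch.cuda.is_available() else "cpu"
model = nn.Linear(1, 1).to(device)
inputs, targets = inputs.to(device), targets.to(device)

optimizer = torch.optim.SGD(model.parameters(), lr=0.5)
loss_fn = nn.MSELoss()

for step in range(500):
    optimizer.zero_grad()
    # The graph is rebuilt on every forward pass (define-by-run).
    loss = loss_fn(model(inputs), targets)
    loss.backward()   # autograd computes gradients for weight and bias
    optimizer.step()

final_loss = loss.item()
print(final_loss, model.weight.item(), model.bias.item())
```

The same zero_grad / forward / backward / step loop scales to CNNs and RNNs; only the model definition and data change.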

Bokeh

Description:

Bokeh is a Python library for interactive visualization, designed to create scalable plots and dashboards that can update in real time. It is especially useful for web applications that require interactive visualizations.

Features:

- Interactive Visualizations: Provides tools for building interactive charts and plots with features like zoom, pan, and hover.

- Real-time Data Streaming: Supports real-time updates for live data visualization in dashboards.

- Wide Variety of Plots: Includes bar charts, line charts, scatter plots, heatmaps, and more.

- Customizable: Allows full customization of plots, including layout, color schemes, and interactivity.

- Web Integration: Plots can be embedded into web pages and dashboards with the help of HTML and JavaScript.

Applications:

- Data Dashboards: Creating real-time data dashboards for visualizing business, financial, or scientific metrics.

- Scientific Visualization: Used in research to display complex datasets interactively.

- Business Intelligence: Visualizing key performance indicators and business metrics for decision-making.

- Web Applications: Creating interactive data visualizations for online applications and services.
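A minimal sketch of an interactive Bokeh chart rendered to standalone HTML follows, assuming `bokeh` is installed. The sales figures are hypothetical. The HTML loads BokehJS from the CDN, which is what provides the zoom, pan, and hover behavior in the browser.

```python
from bokeh.embed import file_html
from bokeh.models import ColumnDataSource, HoverTool
from bokeh.plotting import figure
from bokeh.resources import CDN

# Hypothetical daily sales held in a ColumnDataSource, the structure
# Bokeh uses to feed (and later update) glyphs.
source = ColumnDataSource(data={"day": [1, 2, 3, 4, 5], "sales": [120, 135, 128, 150, 162]})

p = figure(
    title="Daily sales (hypothetical data)",
    x_axis_label="Day",
    y_axis_label="Sales",
    tools="pan,wheel_zoom,box_zoom,reset",
)
p.line("day", "sales", source=source, line_width=2)
p.scatter("day", "sales", source=source, size=8)

# Hover tooltips read column values with the @column syntax.
p.add_tools(HoverTool(tooltips=[("Day", "@day"), ("Sales", "@sales")]))

# Render a standalone HTML page; it can be saved to a file or embedded in a site.
html = file_html(p, CDN, "Sales dashboard")
```

For live dashboards running on a Bokeh server, new rows can be appended with `source.stream(...)`, and the plot updates without a page reload.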

Desk

Description:

Desk is a relatively lesser-known library, often used in the context of organizing and managing tabular data in a more efficient and flexible manner. It is suitable for data manipulation and cleaning tasks in Python.

Features:

- Efficient Data Management: Helps with handling tabular data structures (like DataFrames) in a more efficient and flexible way.

- Data Transformation: Supports transformations such as filtering, aggregation, and reshaping of data.

- Integration: Easily integrates with other Python libraries like Pandas and NumPy for enhanced functionality.

- Optimized Performance: Designed to handle large datasets with minimal memory overhead.

- Simplified Syntax: Provides a simpler, more concise syntax than traditional data processing tools.

Applications:

- Data Manipulation: Used for cleaning and transforming raw datasets into structured and ready-to-use formats.

- Data Aggregation: Performing aggregation tasks like summing, averaging, and counting data across different columns.

- Data Preparation for Analysis: Preprocessing and preparing data for further analysis or machine learning tasks.

- Optimized Data Processing: Handling large-scale datasets efficiently with minimal resource consumption.

GeoPandas

Description:

GeoPandas is an open-source Python library for manipulating and analyzing geospatial data. It extends Pandas with support for geometries and spatial operations, making it easier to work with geographical datasets.

Features:

- Geospatial Data Handling: Allows for the manipulation and analysis of geometric objects like points, lines, and polygons.

- Shapefiles and GeoJSON Support: Supports reading and writing common geospatial file formats.

- Spatial Operations: Implements operations like buffering, intersection, union, and spatial joins.

- Mapping & Visualization: Integrates with libraries like Matplotlib to visualize geospatial data.

Applications:

- Geospatial Analysis: Performing spatial analysis for urban planning, environmental monitoring, and more.

- Mapping and Visualization: Creating maps to visualize geographical data.

- Geographical Data Science: Used in fields like geography, geology, and environmental science to analyze location-based data.
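The spatial operations listed above can be sketched on a toy dataset. This minimal example assumes `geopandas` (and its dependency `shapely`) is installed and that GeoPandas is 0.10 or newer, where `sjoin` takes `predicate` (older releases called it `op`). The zones and points are hypothetical and use plain planar coordinates with no CRS; real data should carry a coordinate reference system.

```python
import geopandas as gpd
from shapely.geometry import Point, box

# Two hypothetical square zones (polygons) and three points.
zones = gpd.GeoDataFrame({"zone": ["A", "B"]}, geometry=[box(0, 0, 2, 2), box(2, 0, 4, 2)])
points = gpd.GeoDataFrame({"id": [1, 2, 3]}, geometry=[Point(1, 1), Point(3, 1), Point(5, 5)])

# Spatial operations work element-wise on the geometry column.
areas = zones.area.tolist()       # [4.0, 4.0]
buffered = points.buffer(0.5)     # circles of radius 0.5 around each point

# Spatial join: attach the zone each point falls within (point 3 has no match).
joined = gpd.sjoin(points, zones, how="inner", predicate="within")
print(joined[["id", "zone"]])

# GeoJSON export as a string; to_file() / read_file() handle files on disk.
geojson = points.to_json()
```

Calling `zones.plot()` would draw the polygons with Matplotlib, which is the mapping integration mentioned above.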

SymPy

Description:

SymPy is an open-source Python library for symbolic mathematics. It provides tools for algebraic manipulation, differentiation, integration, equation solving, and much more.

Features:

- Symbolic Computation: Supports symbolic expressions and manipulations like differentiation, integration, and simplification.

- Equation Solving: Capable of solving algebraic, differential, and Diophantine equations.

- LaTeX Support: Can output results in LaTeX format for easy integration into scientific documents.

- Plotting: Includes basic plotting capabilities for visualizing mathematical expressions.

Applications:

- Mathematical Problem Solving: Used in education and research for solving symbolic math problems.

- Scientific Computing: Applied in areas requiring symbolic algebra, like physics and engineering.

- Computer Algebra Systems (CAS): Used in developing CAS software or in automating mathematical computations.
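A short sketch of the symbolic features above, assuming `sympy` is installed. The expressions are arbitrary examples. Unlike numerical libraries, every result stays exact.

```python
from sympy import Integral, cos, diff, integrate, latex, simplify, sin, solve, symbols

x = symbols("x")

derivative = diff(x * sin(x), x)               # x*cos(x) + sin(x)
area = integrate(x**2, (x, 0, 3))              # exact definite integral: 9
roots = solve(x**2 - 4, x)                     # [-2, 2]
simplified = simplify((x**2 - 1) / (x - 1))    # x + 1

# LaTeX output for use in papers or notebooks.
tex = latex(Integral(x**2, x))

print(derivative, area, roots, simplified)
print(tex)
```
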

PySpark

Description:

PySpark is the Python API for Apache Spark, an open-source distributed computing system that enables fast processing of large-scale data by running computations in parallel across a cluster of machines.

Features:

- Distributed Computing: Enables the parallel processing of large datasets across a cluster of machines.

- DataFrame API: Supports DataFrames for handling structured data, similar to Pandas but at a much larger scale.

- Integration with Hadoop: Works well with the Hadoop ecosystem, leveraging HDFS for storage.

- Machine Learning Support: Includes MLlib for scalable machine learning tasks on large datasets.

Applications:

- Big Data Processing: Processing large datasets in industries like finance, healthcare, and retail.

- Real-time Data Analytics: Used in real-time data streaming applications like event processing and monitoring.

- Distributed Machine Learning: Training machine learning models on large datasets across clusters.

Vaex

Description:

Vaex is a high-performance Python library for handling large datasets. It is designed to work with out-of-core datasets, meaning data that is too large to fit into memory.

Features:

- Out-of-core Data Processing: Processes datasets that do not fit into memory by memory-mapping files (such as HDF5 and Apache Arrow) and evaluating expressions lazily.

- Fast Data Exploration: Supports fast exploration, filtering, and aggregation of large datasets.

- Visualization: Offers interactive visualizations for large datasets.

- Integration with Pandas: Converts to and from Pandas DataFrames, so Vaex can be combined with existing Pandas workflows.

Applications:

- Big Data Analysis: Handling and analyzing large datasets that exceed system memory capacity.

- Data Visualization: Visualizing large datasets interactively in a scalable manner.

- Data Exploration: Fast and efficient exploration of massive datasets in data science and machine learning projects.

NetworkX

Description:

NetworkX is a Python library for the creation, manipulation, and study of complex networks (graphs). It allows for the analysis of structures like social networks, biological networks, and transportation systems.

Features:

- Graph Creation and Manipulation: Allows for creating, adding, and modifying graphs and networks.

- Algorithms: Implements algorithms for network analysis, such as shortest path, clustering, and centrality measures.

- Visualization: Provides basic graph visualization functionality through Matplotlib.

- Support for Various Types of Networks: Handles directed, undirected, weighted, and multigraphs.

Applications:

- Social Network Analysis: Examining relationships, interactions, and connections within social structures to understand patterns and behaviors in networks of individuals or organizations.

- Biological Networks: Modeling and analyzing molecular or neural networks.

- Transport Networks: Studying and optimizing transportation systems, like traffic or logistics.
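A minimal NetworkX sketch covering graph creation, weighted shortest paths, centrality, and clustering, assuming `networkx` is installed. The four-node "road network" and its travel-time weights are hypothetical.

```python
import networkx as nx

# A small weighted, undirected graph (hypothetical; weights = travel time).
G = nx.Graph()
G.add_weighted_edges_from([
    ("A", "B", 1),
    ("B", "C", 2),
    ("A", "C", 5),
    ("C", "D", 1),
])

# Dijkstra-based shortest path that respects edge weights.
path = nx.shortest_path(G, "A", "D", weight="weight")         # ['A', 'B', 'C', 'D']
cost = nx.shortest_path_length(G, "A", "D", weight="weight")  # 4

# Degree centrality: fraction of the other nodes each node is connected to.
centrality = nx.degree_centrality(G)
hub = max(centrality, key=centrality.get)                     # 'C'

# Clustering coefficient: fraction of a node's neighbor pairs that are linked.
clustering = nx.clustering(G)
print(path, cost, hub, clustering)
```

The direct edge A to C is skipped because its weight (5) exceeds the route through B (1 + 2). Swapping `nx.Graph()` for `nx.DiGraph()` or `nx.MultiGraph()` gives directed graphs or multigraphs with the same API.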

Joblib

Description:

Joblib is a Python library for efficiently serializing Python objects, especially those containing large NumPy arrays, and for running functions in parallel. It is commonly used for saving and loading machine learning models.

Features:

- Efficient Serialization: Provides fast and memory-efficient object serialization, especially for large arrays and data structures.

- Parallel Processing: Supports parallel processing for computationally expensive tasks.

- Model Persistence: Commonly used to save and load machine learning models in Scikit-learn.

Applications:

- Machine Learning: Saving and loading trained machine learning models.

- Data Science: Serialization of data and results for long-term storage or sharing across environments.

- Parallel Computing: Accelerating the execution of parallel tasks like model training.
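Both halves of Joblib, persistence and parallelism, fit in a short sketch. It assumes `joblib` and `numpy` are installed. The payload dictionary stands in for a trained model; a fitted scikit-learn estimator is saved the same way with `joblib.dump(model, path)`.

```python
import math
import os
import tempfile

import joblib
import numpy as np
from joblib import Parallel, delayed

# Persistence: dump an object holding a large NumPy array, then load it back.
payload = {"weights": np.arange(1_000_000, dtype=np.float64), "name": "demo"}
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "payload.joblib")
    joblib.dump(payload, path, compress=3)  # compress=3 trades some speed for a smaller file
    restored = joblib.load(path)

# Parallel processing: apply a function to each input using 2 worker processes.
results = Parallel(n_jobs=2)(delayed(math.sqrt)(n) for n in [0, 1, 4, 9])
print(results)  # [0.0, 1.0, 2.0, 3.0]
```

Joblib files are pickle-based, so they should only be loaded from trusted sources.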

FastAPI

Description:

FastAPI is a modern, high-performance web framework for building APIs with Python. It is based on standard Python type hints, which it uses for automatic request validation, serialization, and documentation.

Features:

- Fast and Efficient: Built on Starlette and Pydantic, it is one of the fastest Python web frameworks, making it well suited to high-throughput APIs.

- Automatic Data Validation: Uses Python type hints for automatic request validation and serialization.

- Interactive Documentation: Automatically generates an OpenAPI schema and serves interactive documentation through Swagger UI (at /docs) and ReDoc (at /redoc).

- Asynchronous Support: Supports asynchronous programming for handling multiple requests concurrently.

Applications:

- Web Development: Building RESTful APIs for web applications.

- Machine Learning APIs: Creating APIs for serving machine learning models in production.

- Microservices: Building microservices architecture for distributed systems.

OpenCV

Description:

OpenCV (Open Source Computer Vision Library) is an open-source library for computer vision and machine learning. It provides tools for processing images and videos and performing real-time computer vision tasks.

Features:

- Image and Video Processing: Tools for manipulation, filtering, and transforming images and video.

- Object Detection and Recognition: Includes pre-trained models and algorithms for detecting and recognizing objects in images and videos.

- Machine Learning Integration: Supports integration with machine learning algorithms for visual tasks.

- Real-Time Performance: Optimized for real-time processing of images and videos.

Applications:

- Computer Vision: Image processing, object detection, facial recognition, and more.

- Autonomous Vehicles: Used in self-driving car technology for object detection and navigation.

- Medical Imaging: Analyzing medical images for diagnostic purposes.

- Surveillance Systems: Monitoring and analyzing video feeds for security.

Pytz

Description:

Pytz is a Python library that brings the IANA time zone database into Python, allowing accurate conversions between time zones and correct representation of local times.

Features:

- Timezone Conversion: Easily converts timestamps between different time zones.

- Daylight Saving Time (DST): Handles DST transitions correctly, provided datetimes are attached to a zone with localize() and adjusted with normalize() after arithmetic.

- Standardized Time Handling: Provides consistent time handling across platforms.

- Integration with datetime: Works seamlessly with Python’s datetime module.

Applications:

- Time Zone Conversion: Converting timestamps between different time zones in global applications.

- Scheduling: Handling scheduling tasks in applications that require precise time zone management.

- Log Time Management: Managing timestamps in logs across different time zones.
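The conversion and DST rules above are easiest to see side by side. This minimal sketch assumes `pytz` is installed. The New York zone and the dates are arbitrary examples. It also shows the common pitfall of passing a pytz zone through `tzinfo=`.

```python
from datetime import datetime, timedelta

import pytz

eastern = pytz.timezone("America/New_York")

# Convert an aware UTC timestamp to New York time (summer, so EDT = UTC-4).
utc_dt = datetime(2024, 7, 1, 12, 0, tzinfo=pytz.utc)
ny_dt = utc_dt.astimezone(eastern)
print(ny_dt)  # 2024-07-01 08:00:00-04:00

# Attach a zone to naive local times with localize(), which picks the right DST offset.
winter = eastern.localize(datetime(2024, 1, 15, 9, 30))  # EST, UTC-5
summer = eastern.localize(datetime(2024, 7, 15, 9, 30))  # EDT, UTC-4

# Pitfall: tzinfo=eastern uses the zone's historical LMT offset, not EST/EDT.
wrong = datetime(2024, 1, 15, 9, 30, tzinfo=eastern)

# Arithmetic keeps the old offset; normalize() corrects it across a DST change.
later = eastern.normalize(winter + timedelta(days=90))
print(later)
```

Adding 90 days to a January (EST) time lands in April, so `normalize()` shifts the result to EDT and the wall-clock hour moves from 09:30 to 10:30.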

Pandas-Profiling

Description:

Pandas-Profiling (now developed under the name ydata-profiling) is an open-source Python library for generating exploratory data analysis (EDA) reports. It provides a detailed overview of a DataFrame with just a single line of code.

Features:

- Automated EDA: Generates detailed statistical summaries, correlations, and visualizations.

- Data Quality Checks: Provides insights into missing data, data types, and potential issues in the dataset.

- Customizable Reports: Generates HTML reports that can be easily customized or exported.

- Support for Large Datasets: Offers a minimal mode that skips the most expensive computations, making it practical to profile larger datasets.

Applications:

- Data Exploration: Quickly exploring and understanding datasets in data science and machine learning projects.

- Data Cleaning: Identifying and addressing issues in datasets, such as missing values and outliers.

- Automated Reporting: Automatically generating reports for EDA during the initial stages of a project.

Yellowbrick

Description:

Yellowbrick is a machine learning visualization library that extends Scikit-learn. It provides visualizations for model selection, performance evaluation, and feature analysis.

Features:

- Model Performance Visualization: Includes visualizations like ROC curves, confusion matrices, and feature importance.

- Feature Analysis: Provides tools to visualize and analyze feature distributions and relationships.

- Interpretability: Helps in interpreting and understanding machine learning models.

- Integration with Scikit-learn: Works seamlessly with Scikit-learn models and workflows.

Applications:

- Model Evaluation: Visualizing model performance and diagnostics for better decision-making.

- Feature Selection: Understanding the impact of features on model performance.

- Data Visualization: Providing intuitive visual insights into machine learning workflows.

MLlib

Description:

MLlib is Apache Spark’s scalable machine learning library. It provides tools for machine learning algorithms, including classification, regression, clustering, and collaborative filtering.

Features:

- Scalable ML Algorithms: Implements scalable algorithms for classification, regression, clustering, and collaborative filtering.

- Distributed Computing: Utilizes Spark’s distributed computing power for parallel machine learning tasks.

- Pipeline Support: Supports machine learning pipelines for model training and validation.

- Integration with Spark: Works seamlessly within the Spark ecosystem for large-scale data analysis.

Applications:

- Big Data Machine Learning: Implementing machine learning models at scale for big data environments.

- Recommendation Systems: Used in collaborative filtering and building recommendation engines.

- Predictive Analytics: Applied in

1. What are data science libraries in Python?

Data science libraries in Python are pre-built collections of functions, tools, and methods that simplify complex tasks in data analysis, machine learning, statistical modeling, data visualization, and scientific computing. They help data scientists and analysts work more efficiently by providing ready-to-use solutions for common tasks.

2. How many libraries are there in Python for data science?

Python offers hundreds of libraries for data science, ranging from general-purpose libraries like Pandas and NumPy to specialized ones like TensorFlow, Scikit-learn, and PyTorch. Some sources estimate that there are over 200 Python libraries specifically designed for various data science tasks.

3. What are the top Python libraries for data science?

Some of the most widely used Python libraries for data science include:

- NumPy (for numerical operations)

- Pandas (for data manipulation and analysis)

- Matplotlib and Seaborn (for data visualization)

- Scikit-learn (for machine learning)

- TensorFlow and PyTorch (for deep learning)

- SciPy (for scientific computing)

4. How do I install Python libraries for data science?

You can install Python libraries using the Python package manager pip. For example:

```bash
pip install numpy
pip install pandas
pip install scikit-learn
```

You can also install multiple libraries at once by listing them in a requirements file and running:

```bash
pip install -r requirements.txt
```

5. What is the role of NumPy in data science?

NumPy is fundamental for data science as it provides support for working with arrays and matrices. It allows efficient numerical computations and is the backbone of most numerical tasks in data science, from simple data processing to complex scientific calculations.
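A few lines are enough to see why NumPy underpins so much numerical work: operations apply to whole arrays at once, aggregate along axes, broadcast across shapes, and cover linear algebra. This minimal sketch assumes only that `numpy` is installed; the array values are arbitrary.

```python
import numpy as np

# A 2x3 array; arithmetic applies element-wise without Python loops (vectorization).
a = np.arange(6).reshape(2, 3)   # [[0, 1, 2], [3, 4, 5]]
scaled = a * 10

# Aggregation along an axis: column means.
col_means = a.mean(axis=0)       # [1.5, 2.5, 3.5]

# Broadcasting: the shape-(3,) vector of means is stretched across both rows.
centered = a - col_means

# Linear algebra: matrix product of a with its transpose.
gram = a @ a.T                   # [[5, 14], [14, 50]]

print(scaled, col_means, centered, gram, sep="\n")
```

Centering columns this way (subtracting per-column means) is a common preprocessing step, and Pandas and Scikit-learn perform the same array operations internally.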